Roger Clarke's Web-Site

© Xamax Consultancy Pty Ltd, 1995-2024


Version of 8 March 2024

© Xamax Consultancy Pty Ltd, 2023-24

Available under an AEShareNet licence or a Creative Commons licence.

This document is at http://rogerclarke.com/ID/PGTAz.html

It supersedes the version of 22 October 2023, at http://rogerclarke.com/ID/PGTAz-231022.html, having added the section 'Application of the Model'

A shortened version was presented at ACIS'23, Wellington, 5-8 December 2023

The full slide-set is at http://rogerclarke.com/ID/PGTAz.pdf

The short slide-set for ACIS is at http://rogerclarke.com/ID/PGTAz-ACIS.pdf

The term Authorization refers to a key element within the process whereby control is exercised over access to information and communications technology resources. It involves the assignment of a set of permissions or privileges to particular users, or categories of users. A description is provided of the conventional approach adopted to Authorization. The management of human users is still far from satisfactory, and devices and processes are posing additional challenges as they increasingly act directly on the real world. Applying a previously-published pragmatic metatheoretic model that provides a basis for information systems practice, this paper presents a generic theory of Authorization. The conventional approach to Authorization is re-examined in light of the new theory, weaknesses are identified, and improvements proposed.

Information and Communications Technology (ICT) facilities have become central to the activities not only of organisations, but also of communities, groups and individuals. The end-points of networks are pervasive, and so is the dependence of all parties on the resources that the facilities provide access to. ICT has also moved beyond the processing of data and its use in the production of information. Support for inferencing has become progressively more sophisticated, some forms of decision-making are being automated, and artefacts are increasingly being delegated scope for autonomous action in the real world. Humanity's increasing reliance on machine-readable data, on computer-based data processing, and on inferencing, decision and action, is giving rise to a high degree of vulnerability and fragility, because of the scope for misuse, interference, compromise and appropriation. There is accordingly a critical need for effective management of access to ICT-based facilities.

Conventional approaches within the ICT industry have emerged and matured over the last half-century. Terms in common usage in the area include identity management (IdM), identification, authentication, authorization and access control. The adequacy of current techniques has been in considerable doubt throughout the first two decades of the present century. A pandemic of data breaches has spawned notification obligations in many jurisdictions since the first Security Breach Notification Law was enacted in California in 2003 (Karyda & Mitrou 2016), and the resources of many organisations have proven to be susceptible to unauthorised access (ITG 2023).

I contend that many of the weaknesses in the relevant techniques arise from inadequacies in the conventional conception of the problem-domain, and in the models underlying architectural, infrastructural and procedural designs to support authorization. My motivation in conducting the research reported here has been to contribute to improved information systems (IS) practice and practice-oriented IS research. The method adopted is to identify and address key weaknesses, by applying and extending a previously-published pragmatic metatheoretic model.

The paper commences by reviewing the context and nature of the authorization process, within its broader context of identity management. This culminates in initial observations on issues that are relevant to the vulnerability to unauthorised access. An outline is then provided of a pragmatic metatheoretic model, highlighting the aspects of relevance to the analysis. A generic theory of authorization is proposed, which reflects the insights of the model. This lays the foundations for adaptations to IS theory and practice in all aspects of identity management, including identification and authentication, with particular emphasis placed in this paper on authorization and access control. That theory is then used as a lens whereby weaknesses in conventional authorization theory and practice can be identified and articulated.

A dictionary definition of authorization is "The action of authorizing a person or thing ..." (OED 1); and authorize means "To give official permission for or formal approval to (an action, undertaking, etc.); to approve, sanction" (OED 3a) or "To give (a person or agent) legal or formal authority (to do something); to give formal permission to; to empower" (OED 3b). OED also recognises uses of 'authorization' to refer to " ... formal permission or approval" (OED 1), i.e. to the result of an authorization process. That creates unnecessary linguistic confusion. This paper avoids that ambiguity, by using 'permission' or 'privilege' to refer to the result of an authorization process.

The remainder of this section outlines conventional usage of the term within the ICT industry, with an emphasis on the underpinnings provided by industry standards organisations, clarifies several aspects of those standards, summarises the various approaches in current use, and highlights a couple of aspects that need some further articulation.

The authorization notion was first applied to computers, data communications and IS in the 1960s. It has of course developed considerably since then, both deepening and passing through multiple phases. However, it has mostly been treated as being synonymous with the selective restriction of access to a resource, an idea usefully referred to as 'access control'. Originally, the resource being accessed was conceived as a physical area such as enclosed land, a building or a room; but, in the context of ICT, a resource is data, software, a device or a communications link.

The following quotations and paraphrases provide short statements about the nature of the concept as it has been practised in ICT during the period c.1970 to 2020:

Authorization is a process for granting approval to a system entity to access a system resource (RFC4949 2007, at 1b(I), p.29)

Access control or authorization ... is the decision to permit or deny a subject access to system objects (network, data, application, service, etc.) (NIST800-162 2014, p.2)

Josang (2017, pp.135-142) draws attention to ambiguities in mainstream definitions in all of the ISO/IEC 27000 series, the X.800 Security Architecture, and the NIST Guide to Attribute Based Access Control (ABAC). To overcome the problems, he distinguishes between:

In standards documents, the terms 'subject' and 'system resource / object' are intentionally generic. NIST800-162 (2014, p.3) refers to an 'object' as "an entity to be protected from unauthorized use". Examples of IS resources referred to in that document include "a file" (p.vii), "network, data, application, service" (p.2), "devices, files, records, tables, processes, programs, networks, or domains containing or receiving information; ... anything upon which an operation may be performed by a subject including data, applications, services, devices, and networks" (p.7), "documents" (p.9), and "operating systems, applications, data services, and database management systems" (p.20). For the present purposes 'actor' and 'IS resource' better convey the scope, and are adopted in this paper. Drawing on NIST800-162 (2014, pp.2-3):

Actor means any Real-World Thing capable of action on an IS Resource, including humans and some categories of artefact

IS Resource means Data or a Process in the Abstract World, that an IS is capable of acting upon

Permissions declare allowed actions by an Actor. (NIST uses the terms 'privileges' and 'authorizations', and IETF uses 'authorization'.) Permissions are defined by an authority and embodied in policy or rules. In IETF's RFC4949 (2007), for example, a permission is "An approval that is granted to [an Actor] to access [an IS Resource]" (1a(I)). "The semantics and granularity of [permissions] depend on the application and implementation ... [A permission] may specify a particular access mode -- such as read, write, or execute -- for one or more system resources" (p.29).

The following are adopted as working definitions of the key terms, for refinement at a later stage in this paper:

Authorization is the process whereby a decision is made to declare that an Actor has Permission to perform an action on an IS Resource. The result of an Authorization process is a statement of the following form:

<An Actor> has one or more <Permissions> in relation to <an IS Resource>

Access Control is the process whereby (a) means are provided to enable an authorized Actor to exercise their permissions, and (b) unauthorised Actors are precluded from doing so

Permission means an entitlement or authority to be provided with the capability to perform a particular act in relation to a particular IS Resource

Actions may take the form of operations on Data, in particular data access, data input, data amendment, data deletion, data processing, or data inferencing; or the triggering of a process in relation to IS Resources.
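The separation between the two working definitions, Authorization producing a statement and Access Control enforcing it, can be illustrated with a minimal Python sketch. The actor and resource names and the `Action` vocabulary are hypothetical, and the sketch assumes a default-deny rule, under which any request not covered by a Permission statement is refused.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    # Hypothetical action vocabulary, based on the kinds of action listed above.
    ACCESS = "access"
    INPUT = "input"
    AMEND = "amend"
    DELETE = "delete"
    PROCESS = "process"
    INFER = "infer"
    TRIGGER = "trigger"


@dataclass(frozen=True)
class PermissionStatement:
    """Result of Authorization: <Actor> has <Permissions> in relation to <IS Resource>."""
    actor: str
    permissions: frozenset[Action]
    resource: str


def authorize(actor: str, resource: str, actions: set[Action]) -> PermissionStatement:
    # Authorization: an authority's decision, recorded as a statement.
    return PermissionStatement(actor, frozenset(actions), resource)


def access_control(statements: list[PermissionStatement], actor: str,
                   resource: str, action: Action) -> bool:
    # Access Control: enable Actors covered by a statement, preclude all others
    # (the default-deny assumption).
    return any(s.actor == actor and s.resource == resource and action in s.permissions
               for s in statements)


statements = [authorize("clerk-17", "payments-file", {Action.ACCESS, Action.INPUT})]
print(access_control(statements, "clerk-17", "payments-file", Action.INPUT))   # True
print(access_control(statements, "clerk-17", "payments-file", Action.DELETE))  # False
```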

Authorization processes depend on reliable information. Identity Management (IdM) and Identity and Access Management (IAM) are ICT-industry terms for frameworks comprising architecture, infrastructure and processes that enable the management of user identification, authentication, authorization and access control processes. IdM was an active area of development c.2000-05, and has been the subject of a considerable amount of standardisation, in particular in the ISO/IEC 24760 series (originally of 2011, completed by 2019). A definition provided by the Gartner consultancy is:

Identity management ... concerns the governance and administration of a unique digital representation of a user, including all associated attributes and entitlements (Gartner, extracted 29 Mar 2023, emphasis added)

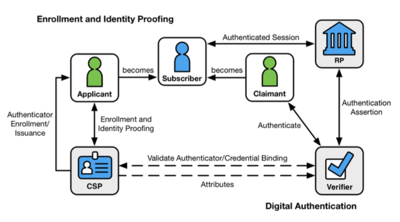

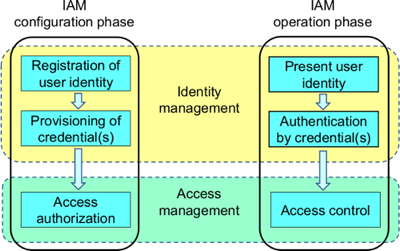

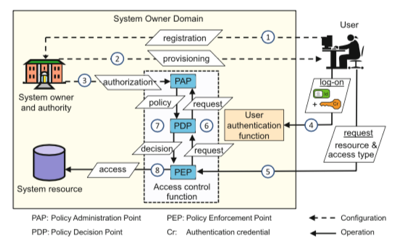

The process flow specified in NIST-800-63-3 (2017, p.10) is in Figure 1. This is insufficiently precise to ensure effective and consistent application. Josang (2017, p.137, Fig. 1) provides a better-articulated overview of the functions, reproduced in Figure 2. This distinguishes the configuration (or establishment) phase from the operational activities of each of Identification, Authentication and Access. This is complemented by a mainstream scenario (Josang 2017, p.143, Fig. 2) that illustrates the practical application of the concepts, and is reproduced in Figure 3.

Figure 1: Extracted from NIST-800-63-3 (2017, p.10)

Figure 2: Extracted from https://en.wikipedia.org/wiki/File:Fig-IAM-phases.png. See also Josang (2017, p.137), Fig. 1

Figure 3: Extracted from Josang (2017, p.143), Fig. 2

The IdM industry long had a fixation on public key encryption, and particularly X.509 digital certificates. This grew out of single-signon facilities for multiple services within a single organisation, with the approach then being generalised to serve the needs of multiple organisations. Monolithic schemes proved inadequate, and gave way to federation across diverse schemes by means of common message standards and transmission protocols. Multiple alternative approaches are adopted on the supply side (Josang & Pope 2005). These are complemented and challenged by approaches on the demand-side that reject the dominance of the interests of corporations and government agencies and seek also to protect the interests of users. These approaches include user-selected intermediaries, own-device as identity manager, and nymity services (Clarke 2004).

The explosion in user-devices (desktops from c.1980, laptops from c.1990, mobile-phones from 2007 and tablets from 2010) has resulted in the present context in which two separate but interacting processes are commonly involved. Individuals authenticate locally to their personal device using any of several techniques designed for that purpose; and the device authenticates itself to the targeted service(s) through a federated, cryptography-based scheme (FIDO 2022). The Identity Management model is revisited in the later sections of this paper.

Whether a request is granted or denied is determined by an authority. In doing so, the authority applies decision criteria. From the 1960s onwards, a concept of Mandatory Access Control (MAC) has existed, originating within the US Department of Defense. Instances of data are assigned a security-level, each user is assigned a security-clearance-level, and processes are put in place whose purposes are to enable user access to data for which they have a requisite clearance-level, and to disable access in relation to all other data. The security-level notion is not an effective mechanism for IS generally. Instead, the criteria may be based on any of the following (with particular models of Access Control listed for each of the alternatives, and outlined below):

An early approach of general application was Discretionary Access Control (DAC), which restricts access to IS Resources based on the identity of users who are trying to access them (although it may also provide each user with the power, or 'discretion', to delegate access to others). DAC matured into Identity Based Access Control (IBAC), which employs mechanisms such as access control lists (ACLs) to record which Actors' identities are permitted to access which IS Resources. The Authorization process assumes that those identities have been authenticated. Because of that dependency, aspects of authentication are discussed later in this paper.
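The ACL mechanism of DAC and IBAC can be sketched in a few lines of Python. The resource and user names are hypothetical, and the sketch assumes that only the owner of a resource holds the discretion to delegate access; real DAC implementations vary in who holds that discretion.

```python
class AclStore:
    """Identity-based access control: one ACL per IS Resource, keyed by Actor identity."""

    def __init__(self) -> None:
        self.owners: dict[str, str] = {}
        self.acls: dict[str, dict[str, set[str]]] = {}

    def create(self, resource: str, owner: str) -> None:
        # The creating Actor becomes the owner, with full permissions.
        self.owners[resource] = owner
        self.acls[resource] = {owner: {"read", "write"}}

    def grant(self, grantor: str, resource: str, grantee: str, permission: str) -> None:
        # The 'discretion' of DAC: in this sketch, only the owner may delegate access.
        if self.owners.get(resource) != grantor:
            raise PermissionError(f"{grantor} may not delegate access to {resource}")
        self.acls[resource].setdefault(grantee, set()).add(permission)

    def check(self, identity: str, resource: str, permission: str) -> bool:
        # Assumes that `identity` has already been authenticated.
        return permission in self.acls.get(resource, {}).get(identity, set())


store = AclStore()
store.create("budget.xlsx", "alice")
store.grant("alice", "budget.xlsx", "bob", "read")
print(store.check("bob", "budget.xlsx", "read"))   # True
print(store.check("bob", "budget.xlsx", "write"))  # False
```

Every Actor and Resource pairing has to be recorded individually, which is why IBAC scales poorly in large organisations.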

IBAC is effective in many circumstances, and remains much-used. It scales poorly, however, and large organisations have sought greater efficiency in managing access. From the period 1992-96 onwards, Role Based Access Control (RBAC) became mainstream in large systems (Pernul 1995, Sandhu et al. 1996, Lupu & Sloman 1997, ANSI 2012). In such schemes, an actor has access to an IS resource based on a role they are assigned to. This offers efficiency where there are significant numbers of individuals performing essentially the same functions, whether all at once, or over an extended period of time. Application of RBAC in the highly complex setting of health data is described by Blobel (2004). See also ISO 22600 Parts 1 and 2 (2014). Blobel provides examples of roles, including (p.254):

Two significant weaknesses of RBAC are that a role is an abstract construct that lacks the granularity needed in some contexts, and that environmental factors are excluded. To address those weaknesses, Attribute Based Access Control (ABAC) has emerged since c.2000 (Li et al. 2002). This grants or denies user requests based on attributes of the actor and/or IS resource, together with relevant environmental conditions: "ABAC ... controls access to [IS resources] by evaluating rules against the attributes of entities ([actor] and [IS resource]), operations, and the environment relevant to a request ... ABAC enables precise access control, which allows for a higher number of discrete inputs into an access control decision, providing a bigger set of possible combinations of those variables to reflect a larger and more definitive set of possible rules to express policies" (NIST-800-162 2014, p.vii). "Attribute-based access control (ABAC) [makes] it possible to overcome limitations of traditional role-based and discretionary access controls" (Schlaeger et al. 2007, p.814). This includes the capacity to be used with or without the requestor's identity being disclosed, by means of an "opaque user identifier" (p.823).

NIST800-162 provides, as examples of actor attributes, "name, unique identifier, role, clearance" (p.11), all of which relate to human users, and "current tasking, physical location, and the device from which a request is sent" (p.23). Examples of IS resource attributes given include "document ... title, an author, a date of creation, and a date of last edit, ... owning organization, intellectual property characteristics, export control classification, or security classification" (p.9). The examples of environmental conditions that are provided include "current date, time, [actor/IS resource] location, threat [level], and system status" (pp.24, 29).
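The contrast drawn above between RBAC and ABAC can be illustrated with a minimal Python sketch. The roles, the attribute names (`region`, `device`, `current_time`) and the business-hours rule are hypothetical, loosely modelled on the kinds of actor, resource and environment attributes NIST800-162 gives as examples. The policy-combining rule used here (permit if any rule is satisfied, otherwise deny) is also an assumption; standards such as XACML define several alternative combining algorithms.

```python
from dataclasses import dataclass, field
from datetime import time

# RBAC: permissions attach to roles, and actors acquire them through role assignment.
ROLE_PERMISSIONS = {
    "claims-officer": {("read", "claim-file"), ("amend", "claim-file")},
    "auditor": {("read", "claim-file")},
}
ACTOR_ROLES = {"kim": {"claims-officer"}, "sam": {"auditor"}}


def rbac_allowed(actor: str, operation: str, resource_type: str) -> bool:
    return any((operation, resource_type) in ROLE_PERMISSIONS.get(role, set())
               for role in ACTOR_ROLES.get(actor, set()))


# ABAC: rules are evaluated against actor, resource and environment attributes.
@dataclass
class Request:
    actor: dict
    resource: dict
    operation: str
    environment: dict = field(default_factory=dict)


def claims_rule(r: Request) -> bool:
    # Hypothetical rule: claims officers may amend claim files only for their own
    # region, from a managed device, during business hours.
    return (r.operation == "amend"
            and r.resource.get("type") == "claim-file"
            and "claims-officer" in r.actor.get("roles", set())
            and r.actor.get("region") == r.resource.get("region")
            and r.actor.get("device") == "managed"
            and time(8) <= r.environment.get("current_time", time(0)) <= time(18))


POLICY = [claims_rule]


def abac_allowed(r: Request) -> bool:
    # Permit if any rule in the policy is satisfied; otherwise deny.
    return any(rule(r) for rule in POLICY)


request = Request(
    actor={"roles": {"claims-officer"}, "region": "north", "device": "managed"},
    resource={"type": "claim-file", "region": "north"},
    operation="amend",
    environment={"current_time": time(10, 30)},
)
after_hours = Request(request.actor, request.resource, "amend",
                      {"current_time": time(22, 0)})

print(rbac_allowed("kim", "amend", "claim-file"))  # True, whatever the time or region
print(abac_allowed(request))      # True
print(abac_allowed(after_hours))  # False: environmental condition not met
```

The RBAC check cannot see the time of day or the region of the claim file, whereas the ABAC rule can combine any of those attributes in a single decision.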

Industry standards and protocols exist to support implementation of Authorization processes, and to enable interoperability among organisationally and geographically distributed elements of information infrastructure. Two primary examples are OASIS SAML (Security Assertion Markup Language), a syntax specification for assertions about an actor, supporting the Authentication of Identity and Attribute Assertions, and Authorization; and OASIS XACML (eXtensible Access Control Markup Language), which provides support for Authorization processes at a finer level of granularity.

A more fine-grained approach is adopted by Task-Based Access Control (TBAC), which associates permissions with a task, e.g. in a government agency that administers welfare payments, with a case-identifier; and in an incident management system, with an incident-report identifier; in each instance combined with some trigger such as a request by the person to whom the data relates (the 'data-subject'), or an allocation to an individual staff-member by a workflow algorithm. See (Thomas & Sandhu 1997, Fischer-Huebner 2001, p.160). To date, TBAC appears to have achieved very limited adoption.
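The task-based approach can be sketched in Python as follows. The case and record identifiers are hypothetical, and two design choices are assumptions of the sketch rather than features of any particular TBAC scheme: permissions become active only when a trigger assigns the task to a staff-member, and they lapse when the task is closed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    task_id: str                    # e.g. a case-identifier or an incident-report identifier
    resources: set[str]             # the data relevant to this task
    assignee: Optional[str] = None
    active: bool = False


class TaskBasedAccessControl:
    def __init__(self) -> None:
        self.tasks: dict[str, Task] = {}

    def open_task(self, task_id: str, resources: set[str]) -> None:
        self.tasks[task_id] = Task(task_id, set(resources))

    def trigger(self, task_id: str, assignee: str) -> None:
        # A trigger (e.g. a data-subject's request, or allocation of the case to a
        # staff-member by a workflow algorithm) activates the task's permissions.
        task = self.tasks[task_id]
        task.assignee, task.active = assignee, True

    def close(self, task_id: str) -> None:
        # In this sketch, permissions lapse when the task is completed.
        self.tasks[task_id].active = False

    def allowed(self, actor: str, resource: str) -> bool:
        return any(t.active and t.assignee == actor and resource in t.resources
                   for t in self.tasks.values())


tbac = TaskBasedAccessControl()
tbac.open_task("case-4711", {"claimant-record-88"})
print(tbac.allowed("officer-3", "claimant-record-88"))  # False: not yet triggered
tbac.trigger("case-4711", "officer-3")
print(tbac.allowed("officer-3", "claimant-record-88"))  # True
print(tbac.allowed("officer-9", "claimant-record-88"))  # False: not assigned
tbac.close("case-4711")
print(tbac.allowed("officer-3", "claimant-record-88"))  # False
```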

The present paper treats the following aspects as being for the most part out-of-scope:

However, the following two aspects are relevant to the analysis that follows.

The above broad description of the conventional approach to Authorization adopts an open interpretation of the IS resource in respect of which an actor is granted permissions. In respect of processes, a permission might apply to all available functions, or each function (e.g. view data, create data, amend data, delete data) may be the subject of a separate permission. In respect of data, a hierarchy exists. For example, a structured database may contain data-files, each of which contains data-records, each of which contains data-items. At the level of data-files, the Unix file-system, for example, distinguishes two permissions relevant to data, (a) read and (b) write, with write on a file enabling its content to be amended, and write on the containing directory enabling files to be created, deleted and renamed.

A permission may apply to all data-records in a data-file, but it may apply to only some, based on criteria such as a record-identifier, or the content of individual data-items. Hence, visualising a data-file as a table, a permission may exclude some rows (records) and/or some columns (data-items). There is a modest literature on the granularity of data-access permissions, e.g. Karjoth et al. (2002), Zhong et al. (2011).
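Row- and column-level granularity can be shown by treating a data-file as a list of records. In this minimal sketch, a permission is assumed to comprise a row predicate (which data-records) and a set of permitted column names (which data-items); the data and the permission are hypothetical.

```python
from typing import Callable

Record = dict[str, object]


def apply_permission(table: list[Record],
                     row_filter: Callable[[Record], bool],
                     columns: set[str]) -> list[Record]:
    """Return only the rows (records) and columns (data-items) the permission covers."""
    return [{k: v for k, v in rec.items() if k in columns}
            for rec in table if row_filter(rec)]


staff = [
    {"id": 1, "name": "A. Ng", "dept": "Sales", "salary": 91000},
    {"id": 2, "name": "B. Roe", "dept": "IT", "salary": 104000},
]
# Hypothetical permission: a Sales manager may view Sales staff, but not salaries.
view = apply_permission(staff, lambda r: r["dept"] == "Sales", {"id", "name", "dept"})
print(view)  # [{'id': 1, 'name': 'A. Ng', 'dept': 'Sales'}]
```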

The Authorization process assumes the existence of an authority that can and does make decisions about whether to grant actors permissions in relation to IS resources. For the most part, the authority is simply assumed to be the operator that manages the relevant data-holdings and/or exercises access control over those data-holdings. However, contexts exist in which the authority is some other party. One example is a regulatory agency. Another example is the entity to which the data relates. This is the case in schemes which include an opt-out facility at the option of that entity, and in consent-based (also sometimes referred to as opt-in) schemes. In such schemes, the authority with respect to each record in the data-holdings is the entity to which it relates, and the system operator implements the criteria set by that entity.

An important example is in health-care settings, particularly in the case of highly-sensitive health data, such as that relating to mental health, and to sexually-transmitted diseases. A generic model is described in Clarke (2002, at 6.) and Coiera & Clarke (2004). In the simplest case, each individual has a choice between the following two criteria:

Further articulation might provide each individual with a choice between the following two criteria:

Each specific denial or consent is expressed in terms of specific attributes, which may define:

A fully articulated model supports a nested sequence of consent-denial or denial-consent pairs. These more complex alternatives enable a patient to have confidence that some categories of their health data are subject to access by a very limited set of treatment professionals. However, most of the conventional models lack the capacity to support consent as an Authorization decision criterion. An exception is the seldom-applied Task-Based Access Control (TBAC) approach (Thomas & Sandhu 1997, Fischer-Huebner 2001 p.160).
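A nested sequence of consent-denial pairs can be evaluated by ordering a patient's rules from general to specific and letting the last matching rule prevail. That evaluation order, the default of denial where no rule matches, and the two attributes used (a data category and a requesting professional) are all assumptions of this minimal Python sketch, not a description of the published model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    effect: str                      # "consent" or "deny"
    category: Optional[str] = None   # None matches any data category
    requestor: Optional[str] = None  # None matches any requestor

    def matches(self, category: str, requestor: str) -> bool:
        return ((self.category is None or self.category == category)
                and (self.requestor is None or self.requestor == requestor))


def patient_decision(rules: list[Rule], category: str, requestor: str) -> str:
    """Rules run from general to specific; the last matching rule prevails."""
    decision = "deny"  # assumption: no access unless the patient has consented
    for rule in rules:
        if rule.matches(category, requestor):
            decision = rule.effect
    return decision


# General consent, a specific denial for mental-health data, and a nested
# consent for one named treating professional.
prefs = [Rule("consent"),
         Rule("deny", category="mental-health"),
         Rule("consent", category="mental-health", requestor="dr-patel")]

print(patient_decision(prefs, "pathology", "dr-jones"))      # consent
print(patient_decision(prefs, "mental-health", "dr-jones"))  # deny
print(patient_decision(prefs, "mental-health", "dr-patel"))  # consent
```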

The next section outlines a model that has been devised to support IS practice and practice-oriented IS research. The following section extends the model to the field of Authorization.

In previously-published work (Clarke 2021, 2022, 2023a, 2023b), a model is proposed that reflects the viewpoint adopted by IS practitioners, and is designed to support understanding of and improvements to IS practice and practice-oriented IS research. The model embodies the socio-technical system view, whereby organisations are recognised as comprising people using technology, each affecting the other, with effective design depending on integration of the two. The model is 'pragmatic', as that term is used in philosophy, that is to say it is concerned with understanding and action, rather than merely with describing and representing. It is also 'metatheoretic' (Myers 2018, Cuellar 2020), on the basis that it builds on a working set of assumptions in each of the areas of ontology, epistemology and axiology. Capitalised terms are defined in the text, and all definitions are reproduced in an associated Glossary.

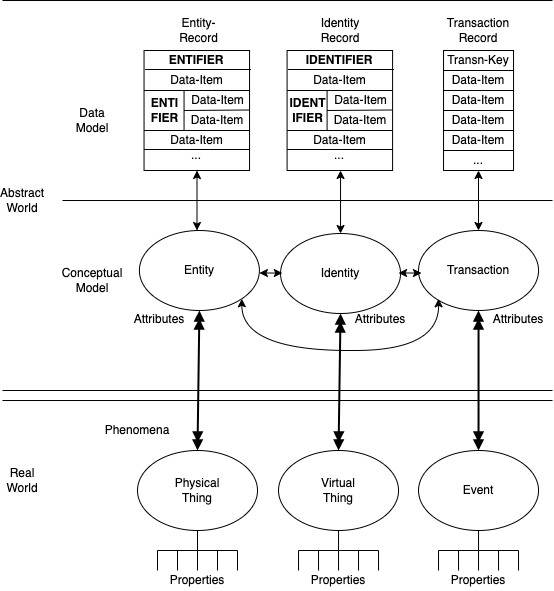

As depicted in Figure 4, the Pragmatic Metatheoretic Model (PMM) distinguishes a Real World from an Abstract World. The Real World comprises Things and Events, which have Properties. These can be sensed by humans and artefacts with varying reliability. Abstract Worlds are depicted as being modelled at two levels. The Conceptual Model level reflects the modeller's perception of Real World Phenomena. At this level, the notions of Entity and Identity correspond to the category Things, and Transaction to the category Events. Authentication is a process whereby the reliability of the model's reflection of reality can be assessed.

A vital aspect of the model is the distinction between Entity and Identity. An Entity corresponds with a Physical Thing. An Identity, on the other hand, corresponds to a Virtual Thing, which is a particular presentation of a Physical Thing, most commonly when it performs a particular role, or adopts a particular pattern of behaviour. For example, the NIST (2006) definition of Authentication distinguishes a "device" (in the terms of this model, an Entity) from a "process" (an Identity), and the Gartner definition of IdM refers to "a digital representation [an Identity] of a user [an Entity]". An Entity may adopt one Identity in respect of each role it performs, or it may use the same Identity when performing multiple roles. An (Id)Entity-Instance is a particular occurrence of an (Id)Entity. For example, within a corporation, over time, different human Entity-Instances adopt the Identity of CEO, whereas the Identity of Company Director is adopted by multiple human Entity-Instances at the same time, each of them being an Identity-Instance.
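The CEO and Company Director example can be expressed as a small data model. This sketch assumes that each adoption of an Identity by an Entity-Instance is recorded with a start date and an optional end date; `holders` then returns the Entity-Instances presenting a given Identity on a given date. All names and dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Adoption:
    entity: str                  # a human Entity-Instance
    identity: str                # an Identity, e.g. a role within the corporation
    start: date
    end: Optional[date] = None   # None means the adoption is still current


def holders(adoptions: list[Adoption], identity: str, on: date) -> set[str]:
    """Entity-Instances that present the given Identity on a particular date."""
    return {a.entity for a in adoptions
            if a.identity == identity and a.start <= on and (a.end is None or on < a.end)}


history = [
    Adoption("person-A", "CEO", date(2015, 1, 1), date(2020, 7, 1)),
    Adoption("person-B", "CEO", date(2020, 7, 1)),
    Adoption("person-A", "Company Director", date(2012, 3, 1)),
    Adoption("person-C", "Company Director", date(2018, 5, 1)),
]
print(holders(history, "CEO", date(2019, 1, 1)))  # {'person-A'}
print(holders(history, "CEO", date(2022, 1, 1)))  # {'person-B'}
```

Different Entity-Instances hold the CEO Identity over time, several hold the Company Director Identity at once, and person-A adopts more than one Identity.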

A further notion that assists in understanding models of human beings is the Digital Persona. This means, conceptually, a model of an individual's public personality based on Data and maintained by Transactions, and intended for use as a proxy for the individual; and, operationally, a Data-Record that is sufficiently rich to provide the record-holder with an adequate image of the represented Entity or Identity. A Digital Persona may be Projected by the Entity using it, or Imposed by some other party, such as an employer, a marketing corporation, or a government agency (Clarke 1994a, 2014). As used in conventional IdM, an Identity, "a unique digital representation of a user", is an Imposed Digital Persona.

The Data Model Level enables the operationalisation of the relatively abstract ideas in the Conceptual Model level. This moves beyond a design framework to fit with data-modelling and data management techniques and tools, and to enable specific operations to be performed to support organised activity. The PMM uses the term Information specifically for a sub-set of Data: that Data that has value (Davis 1974, p.32, Clarke 1992b, Weber 1997, p.59). Data has value in only very specific circumstances. Until it is in an appropriate context, Data is not Information, and once it ceases to be in such a context, Data ceases to be Information. Assertions are putative expressions of knowledge about one or more elements of the metatheoretic model.

The concepts in the preceding paragraphs declare the model's ontological and epistemological assumptions. A third relevant branch of philosophy is axiology, which deals with 'values'. The values in question are those of both the system sponsor and stakeholders. The stakeholders include human participants in the particular IS ('users'), but also those people who are affected even though they are not themselves participants ('usees' -- Berleur & Drumm 1991 p.388, Clarke 1992a, Fischer-Huebner & Lindskog 2001, Baumer 2015). The interests of users and usees are commonly in at least some degree of competition with those of social and economic collectives (groups, communities and societies of people), of the system sponsor, and of various corporations and government agencies. Generally, the interests of the most powerful of those players dominate.

The basic PMM was extended in Clarke (2022), by refining the Data Model notion of Record-Key to distinguish two further concepts: Identifiers as Record-Keys for Identities (corresponding to Virtual Things in the Real World), and Entifiers as Record-Keys for Entities (corresponding to Physical Things). A computer is an Entity, for which a Processor-ID may exist, failing which its Entifier may be a proxy, such as the Network Interface Card Identifier (NIC ID) of, say, an installed Ethernet card, or its IP-Address. A process is an Identity, for which a suitable Identifier is a Process-ID, or a proxy such as its IP-Address concatenated with its Port-Number. For human Entities, the primary form of Entifier is a biometric, although the Processor-ID of an embedded chip is another possibility (Clarke 1994b p.31, Michael & Michael 2014). For Identities (whether used by a human or an artefact), a UserID or LoginID is a widely-used proxy Identifier.

This leads to distinctions between Identification processes, which involve the provision or acquisition of an Identifier, and Entification processes, for which an Entifier is needed. The acquired (Id)Entifier can then be used as the Record-Key for a new Data-Record, or as the means whereby the (Id)Entity can be associated with a particular, already-existing (Id)Entity-Record. The terms 'Entifier' and 'Entification' are uncommon, but have been used by the author since 2001 and applied in about 25 articles within the Google Scholar catchment, which together have over 400 citations.
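The two uses of an acquired (Id)Entifier, as the Record-Key of a new Data-Record or as the means of associating the (Id)Entity with an existing (Id)Entity-Record, can be sketched as follows. The `RecordStore` class and all key values are hypothetical; the Entifier shown is a NIC ID acting as a proxy, and the process Identifier is an IP-Address concatenated with a Port-Number, as described above.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class RecordStore:
    """Data-Records keyed by an (Id)Entifier; `kind` is 'Identifier' or 'Entifier'."""
    kind: str
    records: dict[str, dict[str, Any]] = field(default_factory=dict)

    def associate(self, key: str, **data: Any) -> tuple[dict[str, Any], bool]:
        # Either associate the (Id)Entity with an existing (Id)Entity-Record, or use
        # the acquired (Id)Entifier as the Record-Key of a new Data-Record.
        if key in self.records:
            return self.records[key], False
        self.records[key] = dict(data)
        return self.records[key], True


identities = RecordStore("Identifier")  # e.g. LoginIDs, Process-IDs
entities = RecordStore("Entifier")      # e.g. Processor-IDs, or NIC IDs as proxies

identities.associate("jsmith", display_name="J. Smith")
identities.associate("10.0.0.5:443", description="web server process")
entities.associate("00:1A:2B:3C:4D:5E", device_type="laptop")

record, is_new = identities.associate("jsmith")
print(is_new, record)  # False {'display_name': 'J. Smith'}
```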

Two further papers extend the PMM in relation to Authentication. In Clarke (2023b), it is argued that the concept needs to encompass Assertions of all kinds, rather than just Assertions involving (Id)Entity. That paper presents a Generic Theory of Authentication (GTA), defining it as a process that establishes a degree of confidence in the reliability of an Assertion, based on Evidence. Each item of Evidence is referred to as an Authenticator. A Credential is a category of Authenticator that carries the imprimatur of some form of Authority. Various categories of Assertion are defined that may or may not involve (Id)Entity, including Assertions of Fact, Content Integrity and Value. A Token is a recording medium on which useful Data is stored. Examples of 'useful Data' in the current context include (Id)Entifiers, Authenticators and Credentials.

The second of those papers (Clarke 2023a) defines an Assertion of (Id)Entity as a claim that a particular (Virtual or Physical) Thing is appropriately associated with one or more (Id)Entity-Records. An Assertion of (Id)Entity is subjected to (Id)Entity Authentication processes, in order to establish the reliability of the claim. Also of relevance is the concept of a Property Assertion, whereby a particular Data-Item-Value in a particular (Id)Entity Record is claimed to be appropriately associated with, and to reliably represent, a particular Property of a particular (Virtual or Physical) Thing. Properties, and (Id)Entity Attributes represented by Data-Items, are of many kinds. One of especial importance in commercial transactions is an Assertion of a Principal-Agent Relationship, whereby a claim is made that a particular (Virtual or Physical) Thing has the authority to act on behalf of another particular Thing. An agent may be a Physical Thing (a person or a device), or a Virtual Thing (a person currently performing a particular role, or a computer process).
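One way an Assertion of a Principal-Agent Relationship might be checked is against recorded delegations. This minimal Python sketch assumes that delegation records exist, carry a scope and an optional expiry date, and are themselves reliable; in the terms of the GTA, such a record would be one item of Evidence rather than conclusive proof. All names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Delegation:
    principal: str                  # the Thing on whose behalf the agent acts
    agent: str                      # a person, a device, a role-holder or a process
    scope: frozenset[str]           # acts the agent may perform for the principal
    expires: Optional[date] = None  # None means no expiry is recorded


def supports_principal_agent(delegations: list[Delegation], agent: str,
                             principal: str, act: str, on: date) -> bool:
    """Does a recorded delegation support the claim that `agent` may perform
    `act` on behalf of `principal` on date `on`?"""
    return any(d.agent == agent and d.principal == principal and act in d.scope
               and (d.expires is None or on <= d.expires)
               for d in delegations)


records = [
    Delegation("client-42", "tax-agent-7", frozenset({"lodge-return"}), date(2024, 6, 30)),
    Delegation("client-42", "payroll-process", frozenset({"submit-payment-data"})),
]
print(supports_principal_agent(records, "tax-agent-7", "client-42",
                               "lodge-return", date(2024, 3, 1)))   # True
print(supports_principal_agent(records, "tax-agent-7", "client-42",
                               "lodge-return", date(2024, 7, 1)))   # False: expired
```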

The theory reviewed in this section is extended in the following section to encompass Authorization, in order to lay the foundation for an assessment of the suitability of the conventional approaches to Authorization described earlier in this paper.

This section presents a new Generic Theory of Authorization (GTAz), places it within the context of (Id)Entity Management (IdEM), and shows its relationships with the various other processes that make up the whole. The Theory applies the Pragmatic Metatheoretic Model (PMM) and the Generic Theory of Authentication (GTA), outlined above. It is first necessary to present a generic process model of (Id)Entity Management as a whole, and define terminology in a manner consistent with the PMM and GTA. Wherever practicable, conventional terms and conventional definitions are adopted, or at least elements of conventional definitions. However, the conventional model contains ambiguities, inconsistencies, and poor mappings to the Real World, all of which need to be avoided. As a result, many definitions and some terms are materially different from current industry norms and the current Standards documents. The final sub-section then addresses an omission from conventional Authorization theory. All definitions are reproduced in an associated Glossary (Clarke 2023c).

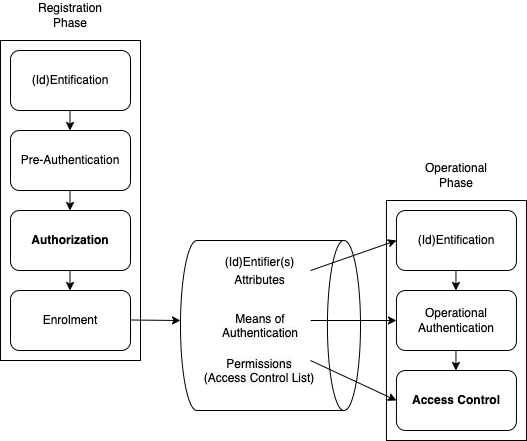

In Figure 2, Josang's (2017) Phase Model was reproduced. In Figure 5, Josang's Model is further refined, to provide a diagrammatic overview of the field as a whole, for which the overarching term (Id)Entity Management (IdEM) is used, and within which Registration and Operational Phases are distinguished.

The Registration Phase comprises:

The Operational Phase comprises:

The GTA outlined above provides definitions of the terms relevant to the preparatory steps of the Registration Phase:

Information System (or just System) means a set of interacting Data and processes that performs one or more functions involving the handling of Data and Information, such as data creation, editing, processing, storage and deletion; and information selection, filtering, aggregation, presentation and use

(Id)Entity Management (IdEM) means the architecture, infrastructure and processes whereby access to IS Resources is enabled for appropriate users, and otherwise denied

(Id)Entification means a process that necessarily involves the provision, acquisition or postulation of either an Identifier (for Identification) or an Entifier (for Entification); and that may also enable association with Data stored about that (Id)Entifier

(Id)Entifier means a set of Data-Items that are together sufficient to distinguish a particular (Id)Entity-Instance in the Abstract World

(Id)Entity-Instance means a particular instance of an (Id)Entity

(Id)Entity means an element of the Abstract World that represents a Real-World Physical Thing (in the case of an Entity) or Virtual Thing (in the case of an Identity)

Nym encompasses both an Identifier that cannot be associated with any particular Entity, whether from the Data itself or by combining it with other Data (an Anonym), and an Identifier that may be able to be associated with a particular Entity, but only if legal, organisational and technical constraints are overcome (a Pseudonym)

Pre-Authentication means a process that evaluates Evidence in order to establish a degree of confidence in the reliability of Assertions of (Id)Entity and of the appropriateness of providing that (Id)Entity with a Permission

Evidence means Data that assists in determining a level of confidence in the reliability of an Assertion

Evidence of (Id)Entity means one or more Authenticators used in relation to (Id)Entity Assertions. (The conventional term Proof of Identity - PoI - is deprecated)

Authenticator means an item of Evidence

Credential means an Authenticator that carries the imprimatur of some form of Authority

Authority means an Entity that is recognised as providing assurance regarding the reliability of an Authenticator

Examples of Authorities include government agencies that issue passports, drivers' licences and citizenship certificates; operators of databases of educational and trade qualifications and testamurs; and notaries.

Relying Party means an Entity that relies on Evidence that is purported to support an Assertion.

An Entity that creates or provides Evidence may or may not have responsibility at law to ensure its reliability or integrity. This is more likely to be the case if the Entity is an Authority that issues a Credential. Where a responsibility exists, an Entity might incur liability to the Relying Party in the event that the Entity fails to fulfil that responsibility.

Token means a recording medium on which useful Data is stored, such as one or more (Id)Entifiers, Authenticators and/or Credentials
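The relationships among Evidence, Authenticator, Credential, Authority and Token can be read as a small data model in which a Credential is not a separate type, but simply an Authenticator that carries the imprimatur of an Authority. The Python sketch below is an illustrative assumption; all class names, field names and example values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Authority:
    """An Entity recognised as providing assurance about an Authenticator."""
    name: str


@dataclass(frozen=True)
class Authenticator:
    """An item of Evidence. If `issued_by` is set, it is also a Credential."""
    description: str
    issued_by: Optional[Authority] = None

    @property
    def is_credential(self) -> bool:
        # A Credential is an Authenticator carrying an Authority's imprimatur.
        return self.issued_by is not None


@dataclass
class Token:
    """A recording medium holding (Id)Entifiers, Authenticators and/or Credentials."""
    identifiers: list[str]
    authenticators: list[Authenticator]


passport_office = Authority("hypothetical passport-issuing agency")
passport = Authenticator("passport", issued_by=passport_office)
self_declaration = Authenticator("self-declared date of birth")
card = Token(identifiers=["card-0001"], authenticators=[passport, self_declaration])

print([a.description for a in card.authenticators if a.is_credential])
```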

The third step of the Registration Phase depicted in Figure 5, Authorization, is the primary focus of this paper. Adopting the modifications to the IETF and NIST definitions proposed in Josang (2017), a clear distinction is drawn between the Authorization process (discussed in this section) and Access Control (discussed in the following section). The following is a refinement of the working definition presented earlier in this paper:

Authorization means a process whereby an Authorization Authority decides whether or not to declare that an Actor has one or more Permissions in relation to a particular IS Resource. A Permission may be specific to an Actor, or the Actor may be assigned to a previously-defined Role and inherit Permissions associated with that Role

Authorization Authority means an Entity with legal or practical power (de jure or de facto) to determine whether and what Permissions a particular Actor has in relation to a particular IS Resource

Role means a coherent pattern of behaviour performed in a particular context
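Because Authorization in this sense is a preparatory decision rather than a run-time check, it can be sketched as the recording of Permissions, either directly against an Actor or via a Role whose Permissions the Actor inherits. The Python below is a minimal sketch under that reading; `AuthorizationAuthority` and its methods are hypothetical names, and the example Roles echo the Fire Warden and interview-panel cases discussed later in this paper.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Permission:
    """Entitlement to perform a specified act on a specified IS Resource."""
    act: str
    resource: str


@dataclass
class AuthorizationAuthority:
    """Records decisions about which Permissions each Actor has (hypothetical API)."""
    role_permissions: dict[str, set[Permission]] = field(default_factory=dict)
    actor_permissions: dict[str, set[Permission]] = field(default_factory=dict)
    actor_roles: dict[str, set[str]] = field(default_factory=dict)

    def define_role(self, role: str, perms: set[Permission]) -> None:
        self.role_permissions[role] = set(perms)

    def grant(self, actor: str, perm: Permission) -> None:
        # A Permission specific to a particular Actor.
        self.actor_permissions.setdefault(actor, set()).add(perm)

    def assign_role(self, actor: str, role: str) -> None:
        # The Actor inherits whatever Permissions the Role carries.
        self.actor_roles.setdefault(actor, set()).add(role)

    def permissions_of(self, actor: str) -> set[Permission]:
        perms = set(self.actor_permissions.get(actor, set()))
        for role in self.actor_roles.get(actor, set()):
            perms |= self.role_permissions.get(role, set())
        return perms


authority = AuthorizationAuthority()
authority.define_role("Fire Warden", {Permission("read", "fire-warden-messages")})
authority.assign_role("alice", "Fire Warden")
authority.grant("alice", Permission("read", "job-applicant-details"))
print(sorted(p.resource for p in authority.permissions_of("alice")))
```

The outcome of this step is a set of recorded Permissions; nothing is exercised until the Operational Phase.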

Categories of Role include:

The operator of an Information System, as principal, or the operator of an (Id)Entity Management service acting as an agent for a principal, is generally assumed to be the Authorization Authority. Many other possibilities exist, however, such as a regulatory agency, a professional registration board and an individual to whom personal data relates.

Actor was earlier defined to mean any Physical Thing or Virtual Thing capable of action on an IS Resource. An Actor may take the form of, in particular:

An Actor needs the capability to perform actions in relation to IS Resources, or an agent that can do so on the Actor's behalf. Many artefacts lack suitable actuators, but devices are increasingly being provided with capacity to act in the real world. Legal persons such as corporations, associations and trusts have no capacity to act, and depend on agents acting on their behalf.

Generally, an Actor can perform as principal, or as an agent for a principal or for another agent. A qualification to this relates to artefacts and processes running in artefacts. Legal regimes generally preclude artefacts from bearing responsibility for actions and outcomes, and in particular they cannot be subject to provisions of the criminal law or bound by contract. Artefacts can be a tool for a human Actor or a legal person, but cannot act as principal where an action on an IS resource involves legal consequences, such as responsibility for due care, or liability for harm arising from an action.

IS Resource was earlier defined as Data or a Process in the Abstract World, that is capable of being acted upon. An IS Resource may be defined at various levels of granularity. In particular:

The final step of the Registration Phase establishes the context in which the subsequent Operational Phase can be performed both effectively and efficiently:

Enrolment means a process that records Data to facilitate the performance of the Operational Phase of (Id)Entity Management

Account means the data-holdings or profile associated with an Actor or (Id)Entity-Instance for which an Authorization process has created a Permission

Depending on the approach adopted, the Enrolment process may need to perform some additional functions, such as the allocation of an Identifier, or the creation of an Authenticator.
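The two additional Enrolment functions mentioned above, allocating an Identifier and creating an Authenticator, might look like the following Python sketch. It assumes a shared-secret Authenticator and stores only a salted PBKDF2 hash of it; the `enrol` and `check_secret` functions, the suffixing rule for userids and the iteration count are all hypothetical choices for illustration.

```python
import hashlib
import secrets
from dataclasses import dataclass


@dataclass
class Account:
    """Data-holdings associated with an Actor for which Permissions exist."""
    userid: str
    salt: bytes
    secret_hash: bytes


def enrol(accounts: dict[str, Account], preferred_userid: str) -> tuple[Account, str]:
    """Hypothetical Enrolment step: allocate an Identifier and create an Authenticator."""
    # Allocate an Identifier, appending a numeric suffix if the preferred one is taken.
    userid, n = preferred_userid, 1
    while userid in accounts:
        n += 1
        userid = f"{preferred_userid}{n}"
    # Create a shared secret to be provided to the user; store only a salted hash.
    secret = secrets.token_urlsafe(12)
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", secret.encode(), salt, 200_000)
    account = Account(userid, salt, digest)
    accounts[userid] = account
    return account, secret


def check_secret(account: Account, candidate: str) -> bool:
    """Recompute the hash of a candidate secret and compare in constant time."""
    digest = hashlib.pbkdf2_hmac("sha256", candidate.encode(), account.salt, 200_000)
    return secrets.compare_digest(digest, account.secret_hash)


accounts: dict[str, Account] = {}
first, secret1 = enrol(accounts, "jsmith")
second, _ = enrol(accounts, "jsmith")
print(first.userid, second.userid)  # jsmith jsmith2
```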

The Registration Phase paves the way for Actors to be given powers to act, which are encapsulated in the entitlement called a Permission. The Operational Phase may be instigated at any time after Registration is complete, and as many times and as frequently as suits the circumstances. The first step, (Id)Entification, exhibits no material differences from the first step in the Registration Phase. The second step, Authentication, differs sufficiently from the Pre-Authentication process during the Registration Phase that a separate definition is needed:

Authentication means a process that evaluates Evidence in order to establish a degree of confidence in the reliability of an (Id)Entity Assertion, such as one communicated as part of a Login process

The Authenticator(s) used in the Operational Phase may be the same as one or more of those used in the Pre-Authentication step of the Registration Phase. More commonly, however, an arrangement is implemented to achieve operational efficiency and user convenience. One approach of long standing is for a 'shared secret' (password, PIN, passphrase, etc.) to be nominated by the user, or provided to the user by the operator. Another mechanism is a one-time password (OTP) sent to the user when needed, via a separate and previously-agreed communications channel. A currently mainstream approach involves a one-time password generator installed on the user's device(s), or posted to them.
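One-time password generators of the kind described above commonly implement HOTP (RFC 4226) or its time-based variant TOTP (RFC 6238). This paper does not name a specific algorithm, so the Python below is a swapped-in illustration of those published algorithms rather than a description of any particular product. It uses only the standard library; the secret is the RFC 4226 test secret.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password as specified in RFC 4226."""
    # HMAC-SHA-1 over the 8-byte big-endian counter.
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select an offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: float, step: int = 30, digits: int = 6) -> str:
    """Time-based variant (RFC 6238): the counter is the number of elapsed time-steps."""
    return hotp(secret, int(unix_time // step), digits)


shared = b"12345678901234567890"  # RFC 4226 test secret
print([hotp(shared, c) for c in range(3)])
```

Both the user's device and the operator hold the same secret, so each can compute the expected value independently; only the short code crosses the channel.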

The third step, Access Control, is then able to be defined in a straightforward manner, as an element within the overall IdEM framework:

Access Control means a process that utilises previously recorded Permissions to establish a Session that enables a User to exercise the appropriate Permissions

Login means a process whereby an Actor communicates a request to exercise Permissions that have been granted to a particular (Id)Entity, which triggers an Authentication process, and, if successful, an Access Control process

Session means a period of time during which an authenticated Actor is able to exercise its Permissions in relation to particular IS Resources

User means a Real-World Thing associated with an (Id)Entifier commonly of a kind referred to as a userid, loginid or username, that is provided with the ability to exercise its Permissions to perform particular actions in relation to particular IS Resources

End User means a User that is provided with Permissions for application purposes

System User means a User that is provided with Permissions for system management purposes
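The division of labour among Login, Authentication, Access Control and Session might be sketched as follows. Access Control here makes no new authorization decision; it only draws on Permissions recorded during the Registration Phase. The stores, function names and the 15-minute session lifetime are hypothetical, and holding the shared secret in plaintext is a simplification for brevity (a real scheme would hold only a salted hash).

```python
import hmac
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Session:
    """Period during which an authenticated Actor may exercise its Permissions."""
    userid: str
    permissions: frozenset[str]
    expires_at: float
    session_id: str = field(default_factory=lambda: secrets.token_hex(8))

    def may(self, permission: str, now: float) -> bool:
        return now < self.expires_at and permission in self.permissions


# Hypothetical stores populated during Registration (Authorization, Enrolment).
SHARED_SECRETS = {"jsmith": "correct horse"}
PERMISSIONS = {"jsmith": frozenset({"read:orders", "update:orders"})}


def authenticate(userid: str, secret: str) -> bool:
    expected = SHARED_SECRETS.get(userid)
    return expected is not None and hmac.compare_digest(expected.encode(), secret.encode())


def access_control(userid: str, now: float, ttl: float = 900) -> Session:
    # Uses previously recorded Permissions; makes no new authorization decision.
    return Session(userid, PERMISSIONS.get(userid, frozenset()), now + ttl)


def login(userid: str, secret: str, now: Optional[float] = None) -> Optional[Session]:
    """A Login triggers Authentication and, if successful, Access Control."""
    now = time.time() if now is None else now
    if not authenticate(userid, secret):
        return None
    return access_control(userid, now)
```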

Conventional Identity Management (IdM) is a creature of the ICT era, which grew out of the marriage of computing and communications. ICT as a whole has moved on, and systems are increasingly capable of action in the Real World, by means of actuators under programmatic control. Manifestations include Supervisory Control and Data Acquisition (SCADA), Industrial Control Systems (ICS), mechatronics, robotics, and the Internet of Things (IoT). A contemporary Generic Theory of Authorization requires extension beyond IS Resources (in their conventional sense of data and processes), to also encompass acts in relation to Real-World Things and Events.

The majority of the definitions in the preceding sub-sections are already sufficiently generic that they require no change to accommodate this expansion of scope. This sub-section proposes augmented definitions for the four terms that need refinements.

Authorization means a process whereby an Authorization Authority decides whether or not to declare that an Actor has one or more Permissions in relation to a particular IS Resource [ INSERT or Real-World Thing or Event ]. A Permission may be specific to an Actor, or the Actor may be assigned to a previously-defined Role and inherit Permissions associated with that Role

Authorization Authority means an Entity with legal or practical power (de jure or de facto) to determine whether and what Permissions a particular Actor has in relation to a particular IS Resource [ INSERT or Real-World Thing or Event ]

Actor means any Real-World [ INSERT Physical or Virtual ] Thing capable of action on an IS Resource [ INSERT or Real-World Thing or Event ], including humans and some categories of artefact

Permission means an entitlement, or legal or practical (de jure or de facto) authority, to be provided with the capability to perform a specified act in relation to a specified IS Resource [ INSERT or Real-World Thing or Event ]
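The extended scope can be expressed in a data model by letting the target of a Permission be any of three kinds of thing, rather than only an IS Resource. The Python sketch below assumes that representation; the class names and example targets are hypothetical.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class ISResource:
    """Data or a Process in the Abstract World."""
    name: str


@dataclass(frozen=True)
class RealWorldThing:
    """A physical object that an actuator can affect, e.g. a valve or a door."""
    name: str


@dataclass(frozen=True)
class RealWorldEvent:
    """An occurrence in the Real World, e.g. the opening of a gate."""
    name: str


# The target of a Permission may now be any of the three kinds.
Target = Union[ISResource, RealWorldThing, RealWorldEvent]


@dataclass(frozen=True)
class Permission:
    act: str
    target: Target


def acts_on_real_world(p: Permission) -> bool:
    """Flag Permissions that fall within the extended scope beyond IS Resources."""
    return isinstance(p.target, (RealWorldThing, RealWorldEvent))


read_record = Permission("read", ISResource("customer-record"))
close_valve = Permission("close", RealWorldThing("pipeline valve 7"))
```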

A specified act may take the form of:

[ INSERT

This section has applied and extended the Pragmatic Metatheoretic Model (PMM) and the Generic Theory of Authentication (GTA), in order to express a Generic Theory of Authorization (GTAz) intended to assist in IS practice and practice-oriented IS research.

This section applies GTAz as a lens through which to observe conventional approaches to authorization, and IdM more generally, and identify implications of the GTAz for theory and practice.

Considerable similarities exist among conventional theories and practices, as summarised in the earlier section; but no single body of theory dominates the field, and no single Identity Management product or service dominates the market. The approach adopted here is to consider the rendition of theory in section 2 as being representative, and to illustrate points made below by reference to the following main sources, listed in chronological order of publication:

The review that was undertaken of conventional approaches from the viewpoint of the GTAz identified many and diverse issues. It proved necessary to devise a classification scheme, distinguishing:

Depictions of conventional authorization theory exhibit many variants and inconsistencies in architecture, process flow, terminology and definitions. Even at the most abstract level, there are considerable differences in interpretations of the notions of identity management and access control. The descriptions of identification, authentication and authorization functions evidence many overlaps. Examples of the conflation of the identification and authentication processes are "An authentication process consists of two basic steps: Identification step: Presenting the claimed attribute value (e.g., a user identifier) [and] Verification step ..." (IETF Glossary p.27) and "The process of identification applies verification to claimed or observed attributes" (ISO24760-1, p.3). The NIST model involves different terms and different conflations of functions. The preliminary phase is referred to as Enrollment and Identity Proofing, and the second phase is called Digital Authentication, with little clarification of the phases' sub-structure (pp.10-12).

Moreover, authorization is sometimes described in ways that suggest it occurs at the time a user is provided with a session in which they can exercise their permissions; whereas its primary usage has to do with a preparatory act: the making of a decision about what permissions a user is to be granted, resulting in an IS Resource being created for future usage during the operational phase.

This unstable base results in very different renditions among Standards, text-books, the various models presented by consultancy firms, various third-party products and services, and the practices of various individual organisations. Josang's proposed Phase Model in Figure 2 was an endeavour to disentangle and rationalise the structure. The GTAz model presented in Figure 6 has taken that proposal further, by separating the phases and steps, applying intuitive terms to them, defining the terms, clarifying the categories of data necessary to enable the operational steps, and generally bringing order to the field.

The distinction between an Entity, which models a Physical Thing, and an Identity, modelling a Virtual Thing, can be found in conventional theory and practice. However, even schemes that recognise there is a difference between them fail to express it clearly and/or fail to cater for that difference in their designs. IETF defines identification as "an act or process that presents an identifier to a system so that the system can recognize a system entity and distinguish it from other entities" (p.145, emphases added). The 2007 definition is unchanged from that of 2000, confirming that the inadequate language has been embedded in theory and practice since its inception.

The Tutorial of Hovav & Berger (2009) sows similar seeds of confusion. It relies on the 'what you know / carry / are' notion of "identifying tools", and hence encompasses, but also merges, the notions of Entity and Identity. Moreover, the term identity is used throughout as though it were rationed to one per person, e.g. "The ability to map one's digital identity to a physical identity" (p.532). Their failure to model Real-World Things in a suitable manner leads them to a notion they call 'partial identities', "each containing some of the attributes of the complete identity" (p.534), to which they apply the term 'pseudonym'. This entangles multiple concepts and delivers both linguistic and conceptual confusion. Entities and identities need to be teased apart, each with one or more (id)entifiers to distinguish (id)entity instances. An entity must be able to map to multiple identities, e.g. a human who acts using the identity of prison warder / spy / protected witness and parent / football coach, each of which must be kept distinct. In addition, an identity must be able to map to multiple entities, e.g. the identity Company Director applies to multiple individual people. The language the Tutorial uses fails those tests. The Tutorial's linguistic tangles lead to the odd outcome that, for those authors, IdM refers not to identity management, but to "managing partial identities and pseudonyms" (p.534). The authors had themselves become sufficiently confused that they lost track of the distinction between 'identity' and 'identifier': "a digital identity may be an e-mail address or a user name" (p.534).

This mis-modelling reflects the authors' subscription to Cameron's 'Laws of Identity' (2005). These 'Laws' were an enabler of identity consolidation in support of the needs of Microsoft, Cameron's employer during the period 1999-2019. Cameron's limited conception of identity was devised in such a way that individuals are precluded from having identities that are independent of one another. All identities are capable of being consolidated. The insertion of some malleable 'Chinese walls' does nothing more than provide an impression of privacy protections.

The leading documents in the area during the decade 2000-2010 created a vast array of misunderstandings, and resulted in a considerable diversity of IdM theory and practice that ill-fitted organisational needs. On the other hand, a decade later, it is reasonable to expect that a revised version of an international Standard would provide a much clearer view of the concepts, and workable terminology and definitions. ISO 24760-1, however, even after a 2019 revision, defines identification as "process of recognizing an entity" (p.1, emphases added), and verification as "process of establishing that identity information ... associated with a particular entity ... is correct" (p.3, emphases added). This is despite the document having earlier distinguished 'entity' (albeit somewhat confusingly, as "item relevant for the purpose of operation of a domain [or context] that has recognizably distinct existence") from 'identity' ("set of attributes ... related to an entity ..."). Even stranger is the fact that, having defined 'identifier' as "attribute or set of attributes ... that uniquely characterizes an identity ... in a domain [or context]", the ISO document defines 'identification' without reference to 'identifier' (all quotations from p.1).

On this unhelpful foundation, the Standard builds further confusions. Despite defining the term 'evidence of identity', the document fails to refer to it when it defines credential, which is said to be "representation of an identity ... for use in authentication ... A credential can be a username, username with a password, a PIN, a smartcard, a token, a fingerprint, a passport, etc." (p.4). This muddles all of evidence, entity, identity, attribute, identifier and entifier, and omits any sense of a credential being evidence of high reliability, having been issued or warranted by an authority. The confusion is further illustrated by the definition of identity proofing as involving "a verification of provided identity information and can include uniqueness checks, possibly based on biometric techniques" (p.5, emphases added). A biometric cuts through all of a person's identities, by providing evidence concerning the underlying entity.

The NIST documents, meanwhile, appear not to recognise any difference between entity and identity, with the only uses of 'entity' referring to legal or organisational entities rather than applicants / claimants / subscribers / users. NIST-800-63-3 also refers to the "classic paradigm" for authentication factors (what you know/have/are), without consideration of the substantial difference involved in "what you are", and without distinguishing humans from active artefacts (NIST-800-63-3 2017, p.12). It also blurs the (id)entity notions when discussing biometrics: "Biometric characteristics are unique personal attributes that can be used to verify the identity of a person who is physically present at the point of verification" (pp.13-14, emphases added).

A further source of relevance is FIPS-201-3 (2022), the US government's Standard for Personal Identity Verification. It defines identification as "The process of discovering the identity (i.e., origin or initial history) of a person or item from the entire collection of similar persons or items" (p.98), and an identifier as "Unique data used to represent a person's identity and associated attributes. A name or a card number are examples of identifiers" (p.98). This appears to exclude biometrics, and admit of multiple identities for an entity (consistently with GTAz), but further deepens the internal inconsistencies and confusions among Standards documents. Further, it defines identity as "The set of physical and behavioral characteristics by which an individual is uniquely recognizable" (FIPS-201-3, p.98). Firstly, this is a real-world definition, as distinct from the abstract-world notion of attributes used by most sources. Secondly, it is about a Physical Thing, which is represented by an Abstract-World Entity; but it refers to it as an "identity", which properly or at least more usefully represents a Virtual Thing. To extend a much-used aphorism, 'the great thing about Standards definitions is that there are so many to choose from'. On the positive side, FIPS-201-3 defines authentication as "The process of establishing confidence of authenticity; in this case, the validity of a person's identity and an authenticator" (p.94, emphasis added), which reflects the practical, complex, socio-technical reality, rather than the chimera of accessible truth.

Beyond the basic definitions, conventional theory mis-handles relationship cardinality. Entities generally have multiple Identities, and Identities may be performed by multiple Entities, both serially and simultaneously. Consistently with that view, ISO 24760-1 expressly states that "An entity can have more than one identity" and "Several entities can have the same identity" (p.1, see also pp.8-9). Yet it fails to reflect those statements in the remainder of the document. Any Authorization scheme built on a model that fails to support those realities is doomed to cause confusion, and to exhibit errors and insecurities. A common example is the practice of parents sending their young children to an automated teller machine or EFTPOS terminal with the parent's payment-card and PIN. Another is the practice of aged parents depending on their grown-up children to perform their Internet Banking tasks. Similarly, in many organisations, employees share loginids in order to save time switching between users. An enterprise model is inadequate if it does not encompass these common activities. It is also essential that there be a basis for distinguishing authorised delegations that breach terms of contract with the card-issuer, or organisational policies and procedures, on the one hand, and criminal behaviour on the other.
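A model that honours the ISO statements quoted above needs a many-to-many relation between Entities and Identities, rather than a single identity attribute on a person record. The Python sketch below uses invented example data. Recording the basis on which an Entity performs an Identity (here 'principal' or 'delegate') is an assumption about one way in which authorised delegation, such as the child using a parent's payment-card, might be distinguished from misuse.

```python
from collections import defaultdict

# Each (Entity, Identity) pair records who performs which Identity, and on what basis.
# All data here is hypothetical.
performs = {
    ("person-A", "prison warder"): "principal",
    ("person-A", "parent"): "principal",
    ("person-B", "Company Director"): "principal",
    ("person-C", "Company Director"): "principal",
    ("parent-P", "cardholder-1234"): "principal",
    ("child-Q", "cardholder-1234"): "delegate",
}

identities_of: dict[str, set[str]] = defaultdict(set)
entities_of: dict[str, set[str]] = defaultdict(set)
for entity, identity in performs:
    identities_of[entity].add(identity)
    entities_of[identity].add(entity)

print(sorted(identities_of["person-A"]))         # one Entity, many Identities
print(sorted(entities_of["Company Director"]))   # one Identity, many Entities
print(performs[("child-Q", "cardholder-1234")])  # the delegation is recorded, not hidden
```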

A further concern is the widespread lack of appreciation evident in the Standards of the high degree of intrusiveness involved in biometric entification of human entities. Data collection involves submission by the individual to an authority. Such procedures may be ethically justifiable in the context of law enforcement, but imposition of them on employees, visitors to corporate sites, customers and clients is an exercise of corporate or government agency power. People subjected to demeaning procedures experience distaste, tension, resentment and disloyalty, resulting in behaviour ranging from repressed and sullen resistance to overt and even violent opposition. Biometric intrusiveness is avoided by choosing to focus on identities rather than entities.

In recent decades, organisations have become heavily dependent on the management of relationships through the Digital Persona, as evidenced by the platform business model (Moore & Tambini 2018), the digital surveillance economy (Clarke 2019), and digitalisation generally (Brennen & Kreis 2016). This results in an increase in the already substantial social distance between individuals and the organisations that they deal with. It brings with it consolidation of an individual's many identities into a data-intensive, composite Digital Persona, imposed by an organisation, and intrusive into the person's life, behaviour and scope for self-determination. Far from generating trust, these architectures stimulate distrust.

Yet another problem with the conventional model is a failure to accommodate nymity, where the entity underlying an identity is not knowable (anonymity), or is in principle knowable but is in practice at least at the time not known (pseudonymity). Hovav & Berger (2009) acknowledge, discuss and define anonymity and pseudonymity (p.533), citing Pfitzmann & Hansen (2006), and recognise the need for pseudonymity to be protected, but capable of being compromised under some conditions: "Identity brokers reveal the linkage when and if necessary" (p.533). The ISO standard, on the other hand, defines a pseudonym as "identifier ... that contains the minimal identity information ... sufficient to allow a verifier ... to establish it as a link to a known identity ..." (p.7). The purposeful inclusion of any identity information in an identifier is in direct conflict with (and is most likely intended to undermine) the established notion of a pseudonym as an identifier that cannot be associated with an underlying entity without overcoming legal, organisational and technical constraints.
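The established notion of a pseudonym can be contrasted with the ISO definition by means of a sketch of an identity broker of the kind Hovav & Berger describe. In the Python below, the pseudonym is random and so contains no identity information at all; the linkage is held only by the broker, and is revealed only when a caller presents grounds the broker accepts. The `IdentityBroker` class and its 'accepted grounds' check are hypothetical stand-ins for the legal, organisational and technical constraints that must be overcome.

```python
import secrets
from typing import Optional


class IdentityBroker:
    """Hypothetical broker that issues Pseudonyms and holds the linkage privately."""

    def __init__(self, accepted_grounds: set[str]):
        self._links: dict[str, str] = {}
        self._accepted = accepted_grounds

    def issue_pseudonym(self, entity_id: str) -> str:
        # Random, so the Pseudonym itself carries no identity information.
        nym = "nym-" + secrets.token_hex(8)
        self._links[nym] = entity_id
        return nym

    def reveal(self, nym: str, grounds: str) -> Optional[str]:
        # The linkage is disclosed only if the stated grounds are accepted.
        if grounds not in self._accepted:
            return None
        return self._links.get(nym)


broker = IdentityBroker(accepted_grounds={"court order"})
nym = broker.issue_pseudonym("entity-42")
```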

There are many circumstances in which authentication is unnecessary, impractical, too expensive, or unacceptable to the entities involved. For example, the creation of accounts at many service-providers involves little or no authentication. The identity is just 'an identity', and any reliance that any remote computer, person or organisation places on it depends on subsequent authentication activities. One reason for this is that unauthenticated identities are entirely adequate for a variety of purposes, and they are inexpensive and quick for both parties. In addition, nymity is positively beneficial in some circumstances, such as obligation-free advice, online counselling, whistle-blowing and the surreptitious delivery of military and criminal intelligence.

The GTAz presented in this paper avoids or manages all of the problems identified in this section. It thereby provides insights into how products and services developed using conventional approaches might be adapted to overcome those problems.

Multiple other aspects of conventional definitions are unhelpful to IS practitioners. For example, Hovav & Berger (2009)'s notion of credential at first appears to be consistent with GTA usage, quoting the Wikipedia definition: "A credential is an attestation of qualification, competence, or authority issued to an individual by a third party with a relevant de jure or de facto authority or assumed competence to do so" (p.542). However, the subsequent text backtracks, talking of unauthenticated assertions and applying to them the strange term 'raw credentials'. So the Tutorial undermines the clarity of the Wikipedia entry it nominally adopted. Similarly, the IETF definition of credential is "A data object that is a portable representation of the association between an identifier and a unit of authentication information" (RFC4949 2007, p.84). This leaves no space for a more generic notion of evidence, and fails to convey the notion of issuance or warranty by an authority. In similar vein, the NIST document limits its use of credential solely to electronic means of associating an authenticator with a user (NIST-800-63-3 2017, p.18), using the unrealistically strong term 'binding', presumably in an endeavour to imply truth and hence irrefutability.

Further confusions are invited by the IETF definitions of user, system entity, system user and end user. The Generic Theory presented in this paper includes terms and definitions that accord with IS practice, distinguishing an End User, whose interests relate to the application, from a System User, concerned with system administration. Another terminological inconsistency arises in that the NIST model refers to an (Id)Entity as an Applicant during the preliminary phase, and a Claimant, then Subscriber, during the operational phase (p.10).

A further source of confusion is the use of the word 'authorization' not only for a process, but also for the result of that process, e.g. IETF defines authorization as "an approval that is granted to a system entity to access a system resource. (Compare: permission, privilege)" (p.29), and permission as "Synonym for 'authorization'. (Compare: privilege)" and "An authorization or set of authorizations to perform security-relevant functions in the context of role-based access control" (p.220). This is similar to the informal usage of 'identification' to refer to a document or other evidence of identity (as in the oft-heard "I'll need to see some identification"). That particular ambiguity is not evident in the ISO Standard, but it does not deprecate its use. The GTAz, in contrast, seeks to provide clarity in relation to all relevant concepts and terms.

Implicit in almost all renditions of conventional identity management theory is the idea that not only does a singular truth exist in relation to assertions involving (Id)Entity, but also that that truth is accessible, both in principle and as a matter of practice in vast numbers of assertion authentication activities in highly diverse environments. This is evidenced by consistent use of absolute terms such as 'verify', 'proof' and 'correct'. For example, the IETF Security Glossary defines authenticate to mean "verify (i.e., establish the truth of) an attribute value claimed by or for a system entity or system resource" (2000, p.15, unchanged in 2007, p.26, emphasis added). Hence authentication is the process of verifying a claim. The problem is also inherent in ISO24760-1, where "verification" is defined as "process of establishing that identity information ... associated with a particular entity ... is correct" and "authentication" is defined as "formalized process of verification ... that, if successful, results in an authenticated identity ... for an entity" (p.3, emphases added).

Truth/Verification/Proof/Validation notions are applicable within tightly-defined mathematical models, but not in the Real World in which Identity Management is applied, whose complexities are such that socio-technical perspectives are essential to understanding, and to effective analysis and design of IS. This appears to be acknowledged by the ISO Standard, in its observation about authentication that it involves tests "to determine, with the required level of assurance, their correctness" (p.3, emphasis added). A qualification to an absolute term like 'correctness' is incongruous; but that does not appear to have been noticed by the Standard's authors, reviewers or approving bodies. It is unclear what practitioners who consult the Standard make of such inconsistencies. At the very least, the ambiguities appear likely to sow seeds of doubt, and cause confusions.

The Generic Theory avoids the conventional approach's assumptions about truth, and instead reflects real-world complexities and uncertainties by building the definitions around the degree of confidence in the reliability of assertions.
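The difference between a truth-based and a confidence-based outcome can be illustrated by an authentication function that returns a degree of confidence and a comparison against a required level, rather than a bare 'verified' flag. The Python below is a sketch under a loudly-stated assumption: that items of Evidence fail independently, so that confidence is one minus the product of each item's probability of being unreliable. The reliability figures are hypothetical, and estimating them is itself a matter of judgement.

```python
from math import prod


def confidence(evidence_reliabilities: list[float]) -> float:
    """Combine per-item reliabilities into an overall degree of confidence.

    ASSUMPTION (illustration only): items of Evidence fail independently.
    """
    for r in evidence_reliabilities:
        if not 0.0 <= r <= 1.0:
            raise ValueError("reliability must lie in [0, 1]")
    return 1.0 - prod(1.0 - r for r in evidence_reliabilities)


def authenticate(evidence_reliabilities: list[float], required: float) -> tuple[bool, float]:
    """Return whether the required level of confidence is met, and the level reached."""
    level = confidence(evidence_reliabilities)
    return level >= required, level
```

The result is never 'true', only sufficient or insufficient for a stated purpose, which is the distinction the Generic Theory draws.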

RBAC was conceived at a time when most IS operated inside organisational boundaries. On the other hand, the notions of inter- and multi-organisational systems were already strongly in evidence, and extra-organisational systems extending out to individuals have been operational since c.1980 (Clarke 1992a). Yet the IETF Glossary of 2000, revised in 2007, defines role to mean "a job function or employment position" (IETF, p.254). ISO provides no definition, but the context suggests a similarly narrow intent.

The NIST exposition on ABAC makes the remarkable statement that "a role has no meaning unless it is defined within the context of an organization" (NIST800-162 2014, p.26). Further, although the document suggests that ABAC supports "arbitrary attributes of the user and arbitrary attributes of the [IS resource]" (p.vii), the only examples provided for actor-attributes in the entire 50-page document are position descriptions internal to an organisation: "a Nurse Practitioner in the Cardiology Department" (p.viii, 10), "Non-Medical Support Staff" (p.10). The GTAz presented here makes clear that Roles, and associated Identities, are relative to an IS, and are not limited to organisational positions. To the extent that Attributes rather than Roles are used as the organising concept, the same holds for Attributes.

An implicit assumption appears to be that a one-to-one relationship exists between Organisational Identity and Organisational Role. However, although an Identity is likely to have a primary Organisational Role, it commonly has additional Roles, e.g. as a Fire Warden, as a mentor to a junior Assistant, and as a member of an interview panel. Particular Permissions are needed for each Role (e.g. for access to messages intended only for Fire Wardens, and for access to the personal details of job-applicants).
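
The one-to-many relationship between an Identity and its Roles can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a description of any particular RBAC product: the Role names follow the examples in the text, while the identity keys (`staff-0042`, `staff-0043`), the permission strings and the function `permissions_for` are hypothetical. The Permissions available to an Identity are taken to be the union of those attached to each Role it holds.

```python
# Hypothetical illustration: each Role carries its own Permissions.
# Role names follow the text; permission strings are invented.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "Assistant": {"read:client-file"},
    "Fire Warden": {"read:fire-warden-messages"},
    "Interview Panel Member": {"read:applicant-details"},
}

# One-to-many: an Identity maps to a set of Roles, not to a single Role.
IDENTITY_ROLES: dict[str, set[str]] = {
    "staff-0042": {"Assistant", "Fire Warden", "Interview Panel Member"},
    "staff-0043": {"Assistant"},
}

def permissions_for(identity: str) -> set[str]:
    """Union of the Permissions attached to every Role the Identity holds."""
    perms: set[str] = set()
    for role in IDENTITY_ROLES.get(identity, set()):
        perms |= ROLE_PERMISSIONS.get(role, set())
    return perms

print(sorted(permissions_for("staff-0042")))
print(sorted(permissions_for("staff-0043")))
```

A scheme that forces a single Role per Identity would have to either omit the Fire Warden and panel Permissions or fold them into the primary Role, granting them to every Assistant.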

A common weakness in schemes is inadequate attention to the granularity of data and processes. Many Permissions are provided at too gross a level, with entire records accessible, well in excess of the data justified on the basis of the need-to-know principle. Excessive scope of Permissions invites abuse, in the form of appropriation of data, and performance of functions, for purposes other than those for which the Permissions were intended. This may be done out of self-interest (commonly, curiosity, electronic stalking of celebrities, or the identification and location of individuals), as a favour for friends, or for a fee. RBAC does little to control the threats of insider attack and data breach, and the Standards do not acknowledge the need for mitigation measures. This is hardly a new insight: see, for example, Clarke (1992b).
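
The difference between whole-record access and access constrained by the need-to-know principle can be shown with a simple projection. This sketch assumes that Permissions are expressed per data-item rather than per record. The record contents, the Role names and the function `view_record` are all hypothetical.

```python
# Hypothetical client record; data-item names are invented for illustration.
CLIENT_RECORD = {
    "client_id": "C-1001",
    "name": "A. Citizen",
    "home_address": "1 Example St",
    "date_of_birth": "1970-01-01",
    "account_balance": 1234.56,
}

# Assumed field-level Permissions: each Role is granted only the data-items
# that its functions require, rather than the entire record.
FIELD_PERMISSIONS: dict[str, frozenset[str]] = {
    "Call-Centre Operator": frozenset({"client_id", "name", "account_balance"}),
    "Mail-Out Clerk": frozenset({"client_id", "name", "home_address"}),
}

def view_record(role: str, record: dict) -> dict:
    """Return only the data-items that the Role is permitted to see."""
    allowed = FIELD_PERMISSIONS.get(role, frozenset())
    return {k: v for k, v in record.items() if k in allowed}

print(view_record("Call-Centre Operator", CLIENT_RECORD))
print(view_record("Mail-Out Clerk", CLIENT_RECORD))
```

Under this design, a call-centre operator curious about a client's home address has no Permission to see it, which narrows the scope for the kinds of abuse described above.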

A further concern arises with implied powers. "The RBAC object oriented model (Sandhu 1996) organises roles in a set/subset hierarchy where a senior role inherits access permissions from more junior roles" (p.135). Permission inheritance is a serious weakness. A superior does not normally need access to the data handled by more junior roles, and hence should not normally have the Permission. Access to such data is necessary for the performance of review and audit, but not for the performance of supervision.
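
The effect of a set/subset hierarchy can be contrasted with explicit assignment in a short sketch. This is a hypothetical illustration, not Sandhu's formal model: the Roles, permission strings and both functions are invented. The first function accumulates Permissions down the hierarchy, as the quoted model describes; the second grants a Role only what has been assigned to it directly.

```python
# Hypothetical hierarchy: each senior Role lists the junior Roles below it.
JUNIOR_ROLES: dict[str, list[str]] = {
    "Team Leader": ["Case Officer"],
    "Case Officer": [],
}

DIRECT_PERMISSIONS: dict[str, set[str]] = {
    "Team Leader": {"approve:case"},
    "Case Officer": {"read:client-file", "update:client-file"},
}

def inherited_permissions(role: str) -> set[str]:
    """Hierarchical model: a senior Role accumulates all junior Permissions.
    Assumes the hierarchy is acyclic."""
    perms = set(DIRECT_PERMISSIONS.get(role, set()))
    for junior in JUNIOR_ROLES.get(role, []):
        perms |= inherited_permissions(junior)
    return perms

def explicit_permissions(role: str) -> set[str]:
    """Alternative: a Role holds only the Permissions assigned to it directly."""
    return set(DIRECT_PERMISSIONS.get(role, set()))

print(sorted(inherited_permissions("Team Leader")))
print(sorted(explicit_permissions("Team Leader")))
```

Under the explicit approach, a review or audit function would be granted read access as a Role in its own right, rather than acquiring it as a side-effect of seniority.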

More generally, RBAC approaches are unable to accommodate task as a determinant of Permission. It is even uncommon to see a proxy approach adopted. A soft approach would be to require each User to provide a brief declaration of the reason for each exercise of a Permission. In many IS, this need be no more than a Case-Number, Email-Id, or other reference-number to a formal organisational register. Once that declaration is logged, along with the Username, Date-Time-Stamp, Record(s) accessed and Process(es) performed, a sufficient audit trail exists. That, plus the understanding that log-analysis is undertaken, anomalies are investigated, and sanctions for misuse exist and are applied, acts as a substantial brake on insiders abusing their privileges, and as an enabler of ex post facto investigation and corrective action.
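
The soft approach of declaring a reason for each exercise of a Permission, and logging it, might be sketched as follows. The logged fields mirror those listed in the text (Username, Date-Time-Stamp, Record(s) accessed, Process(es) performed, and the declared reference). Everything else is assumed: the names `exercise_permission` and `find_anomalies`, the Case-Number format, and the simplistic anomaly test of checking the declared reference against a register of open cases.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class AccessLogEntry:
    username: str
    timestamp: datetime          # Date-Time-Stamp, recorded in UTC
    records: tuple[str, ...]     # Record(s) accessed
    process: str                 # Process performed
    reason_ref: str              # declared reason, e.g. a Case-Number

AUDIT_LOG: list[AccessLogEntry] = []

def exercise_permission(username: str, records: tuple[str, ...],
                        process: str, reason_ref: str) -> AccessLogEntry:
    """Record an exercise of a Permission, refusing it if no reason is declared."""
    reason_ref = reason_ref.strip()
    if not reason_ref:
        raise PermissionError("a reason reference must be declared")
    entry = AccessLogEntry(username, datetime.now(timezone.utc),
                           records, process, reason_ref)
    AUDIT_LOG.append(entry)
    return entry

def find_anomalies(log: list[AccessLogEntry],
                   register: set[str]) -> list[AccessLogEntry]:
    """Flag entries whose declared reason is not in the organisational register."""
    return [e for e in log if e.reason_ref not in register]

# Hypothetical register of open cases, and two logged accesses.
OPEN_CASES = {"CASE-2024-0117"}
exercise_permission("jsmith", ("C-1001",), "read", "CASE-2024-0117")
exercise_permission("jsmith", ("C-2002",), "read", "CASE-9999")
for entry in find_anomalies(AUDIT_LOG, OPEN_CASES):
    print("investigate:", entry.username, entry.records, entry.reason_ref)
```

The check does not prevent a determined insider from citing a genuine but irrelevant Case-Number; its value lies in the audit trail it creates, which supports the ex post facto investigation the text describes.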

Conventional approaches start not with an open view, but with a fixation on (Id)Entity Assertions. The pre-authentication, authentication and authorization of (Id)Entity Assertions are resource-intensive, may be challenging, are likely to be intrusive, and hence are expensive. They are also likely to be the subject of countermeasures by disaffected users. From an efficiency perspective, there would be advantages in first determining which Assertions need to be authenticated in order to satisfy the organisation's needs, and then devising a cost-effective strategy to manage the risks the organisation faces.

Excessively expensive, cumbersome and intrusive designs also have negative impacts on effectiveness. Exceptions encourage informal flexing of procedures, and workarounds arise in order to speed up processes and to enable organisations' core functions to be performed. Some of the exceptions are likely to involve individuals who resist the dominance of enterprise interests and the disregard of individuals' interests, which are inherent in processes that involve intensive use of personal data, and especially in the imposition of biometric measures. Users generally, and particularly those who are external to the organisation, are likely to be attracted to user-friendly architectures, standards and services, including Privacy-Enhancing Tools (PETs) that are oriented towards obfuscation and falsification. This development is likely to compromise the effectiveness of organisations' preferred identity management techniques (Clarke 2004).

A further consideration is that the data-collection arising from the operation of conventional IdM has the attributes of both a data asset and a data liability. The organisation may perceive potential business value in re-purposing the data, but the data is also attractive to parties outside the organisation. The former risks non-compliance with data protection laws. The latter gives rise to the need for investment in safeguards, for data generally, but especially for authenticators. Recent years have seen much more targeted attacks, seeking, for example, credit-card details and quality images of drivers' licences (Poehn & Hommel 2022).

This section has identified weaknesses of conventional approaches to Identity Management (IdM) that are addressed by the IdEM framework presented in this paper. As a critical element within IdEM, the Generic Theory of Authorization (GTAz) enables the muddiness of thinking in existing theory to be identified and explained, avoids or resolves those confusions, and guides designers away from inappropriate designs and towards more suitable approaches to the field.

This article has proposed a Generic Theory of Authorization (GTAz) that is based on a pragmatic meta-theoretic model and on a Generic Theory of Authentication (GTA), that is comprehensive and cohesive, and that takes into account the key features of prior models and theories in the area. In order to demonstrate the efficacy of the model, a review was undertaken, through the lens of GTA/GTAz, of one of the reference documents that has significantly influenced industry practice.

The document selected for close attention was NIST800-63-3 (2017). This provides guidelines covering what it calls "identity proofing and authentication of users (such as employees, contractors, or private individuals) interacting with government IT systems over open networks" (p.iii). The document is addressed to US government agencies. It has been influential on government agencies, business enterprises and guideline authors worldwide, and has in excess of 400 Google citations. The 2017 edition built on and superseded editions of 2011 and 2013. A further edition has been in development for several years; but, even when published, it will take some years before it materially influences the design and use of the large numbers of identity management application products and services.

As indicated by their titles and descriptions, and the diagrammatic representations in Figures 2 and 5, the two models exhibit differences in purpose and scope. NIST's is concerned solely with digital rather than physical contexts, GTAz with both. NIST's scope is defined as identification and authentication, together with setting the frame within which authorization and access control are performed, whereas GTAz, built over GTA, extends across the whole undertaking. Although NIST's influence is broad, its primary target audience is US government agencies. However, despite NIST's indicative limitation to the authentication of human users, both models encompass artefacts as well as humans. These, and other more specific factors, needed to be taken into account in undertaking the analysis.

The remainder of this section identifies aspects of the NIST model that, it is contended, contain what might be variously regarded as flaws, weaknesses or ambiguities, which are capable of being overcome by adoption of the GTA/GTAz models and terminology.

The aspects are addressed in six sub-sections, the first three concerned with conceptual matters, and the last three with a process view of the undertaking.

The term 'meta-theoretic assumptions' (or in some cases 'commitments') refers to the metaphysical or philosophical bases on which an analysis is grounded (Cuellar 2020). These are usefully categorised within the fields of ontology (concerned with existence), epistemology (concerned with knowledge), axiology (concerned with value) and methodology (concerned with processes).

One particular epistemological assumption that pervades NIST (2017) is that of humanly-accessible truth. Throughout the document, notions such as 'proofing', 'verification' and 'determination of validity' predominate. (For example, the string 'verif' occurs more than 100 times in the document's 60pp.). These truth-related notions are particularly prominent in relation to the process of identification. The implication is that something approaching infallibility is achievable.

Some more circumspect expressions are used primarily in relation to the authentication process, such as 'reasonable risk based assurance' (p.2), and 'levels of assurance' and 'establishing confidence in' (in the definition of Digital Authentication on p.45). However, the definition of Authentication uses the truth-related term "verifying the identity of ..." (p.41), and a Credential is declared as having a mandatory characteristic that it "authoritatively binds an identity ... to [an] authenticator" (p.44). Unbreakable association may be achievable with artefacts; but the only way to implement it with humans is to reduce the human to an artefact, e.g. through chip-implantation. A more appropriate statement would be that 'a credential provides a considerable degree of confidence in an assertion, because it entails a combination of an assurance from a third party in which reliance is placed, together with a technical design that reliably associates the assertion with the party that is being authenticated'.

The inconvenient realities that need to be confronted in practice are that infallibility is unachievable, and high levels of confidence are resource-intensive and inconvenient for all parties -- and even then are often unachievable, particularly where some parties have an interest in ensuring that the process is of low quality.

A problem that unrealistic definitions give rise to is that practitioners have no option but to compromise, if not simply breach, the recommendations. A more useful approach is to encourage practitioners to exercise their business judgement in applying qualified recommendations, so as to achieve an appropriate balance among multiple objectives. The GTAz expressly couches the definition of Authentication in terms of establishing a degree of confidence in the reliability of an (id)entity assertion. The framing of NIST's model and guidance would be far less ambiguous if it adopted that approach.
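
The contrast between a truth-oriented 'verified / not verified' outcome and an outcome expressed as a degree of confidence can be made concrete. The sketch below is hypothetical throughout and is not a method proposed by GTAz or by NIST. It assumes that each item of evidence can be given a number representing the probability that it would expose a false assertion, and it treats those items as independent, which is a strong simplifying assumption. The business process then decides whether the confidence achieved is sufficient for the risk it faces.

```python
from math import prod

def combined_confidence(evidence: list[float]) -> float:
    """Probability that at least one item of evidence would have exposed a
    false assertion. Independence of the items is a simplifying assumption."""
    return 1.0 - prod(1.0 - p for p in evidence)

def authenticate(evidence: list[float], required: float) -> tuple[bool, float]:
    """Judge whether the confidence achieved meets the level the business
    process requires. The outcome is a judgement against a threshold, not a
    proof that the assertion is true."""
    confidence = combined_confidence(evidence)
    return confidence >= required, confidence

# Hypothetical figures: two items of evidence, for a moderate-risk process
# and then for a high-risk one.
print(authenticate([0.9, 0.5], required=0.9))
print(authenticate([0.9], required=0.99))
```

Even when the threshold is met, the result remains a degree of confidence below 1.0, which is the point made in the text: infallibility is not on offer, and the appropriate threshold is a matter of business judgement.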

The NIST model appears to be inconsistent in its ontological assumptions, or perhaps wavers between a 'materialist' assumption (that phenomena exist in a real world) and an 'idealist' alternative (that everything exists in the human mind).

The GTAz is based on express metatheoretic assumptions that adopt a truce between the two assumptions, whereby a real world of phenomena is postulated to exist, on one plane, which humans cannot directly know or capture, but which humans can sense and measure, such that they can construct a manipulable abstract-world model of those phenomena, on a plane distinct from that of the real world. GTAz then declares terminology that expressly distinguishes whether each particular term refers to the real or the abstract world. The real world features things and events with properties. In the abstract world, the conceptual-model level comprises entities, identities, transactions and relationships among them, and the data-model level features data-items, records, identifiers and entifiers.
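
The separation of planes and levels can be reflected in how an information system's data structures are declared. The following is a minimal, hypothetical sketch: the class names follow the terms used in the text, but the fields, the labels and the example values are invented, and it is not a complete rendering of the GTAz model. Real-world Things and Events are deliberately absent, because the code can only hold abstract-world elements constructed from sensing and measurement.

```python
from dataclasses import dataclass, field
from typing import Optional

# Real-world plane (Things, Events, Properties): not representable here.
# Only abstract-world elements appear below.

@dataclass
class Identity:
    """Conceptual-model level: an Identity relevant to a particular IS."""
    label: str

@dataclass
class Entity:
    """Conceptual-model level: an Entity, which may present several Identities."""
    label: str
    identities: list[Identity] = field(default_factory=list)

@dataclass
class Record:
    """Data-model level: data-items, indexed by an Identifier and/or Entifier."""
    data_items: dict[str, str]
    identifier: Optional[str] = None   # signifies an Identity
    entifier: Optional[str] = None     # signifies an Entity

# Hypothetical example: one Entity presenting two Identities, and a Record
# that carries an Identifier but no Entifier.
person = Entity("person-A", [Identity("employee"), Identity("fire-warden")])
roster_entry = Record({"floor": "Level 3"}, identifier="FW-07")
print(person, roster_entry, sep="\n")
```

Keeping Identifier and Entifier as distinct data-items makes it explicit when a Record signifies only an Identity, and prevents the two from being silently conflated.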

One source of confusion in NIST (2017) is the absence of clarity about whether 'identity' is a real-world phenomenon or an abstract-world model-element. For example, the notion of "the subject's real-life identity" (p.iv) collides with the definition of 'identity' as the abstract-world notion of "an attribute or set of attributes of a subject" (p.47).

Another inconsistency that invites misinterpretation appears in the definitions of the general term 'authentication' ("verifying the identity of a user, process, or device ...", p.41) and the specific term 'digital authentication' ("the process of establishing confidence in user identities presented digitally to a system", p.45). One uses a truth-related word, and the other a practical and relativistic term. One encompasses both humans and artefacts, whereas the other refers only to users, which by implication means only human users. This is not just a minor editorial flaw, because the word 'authentication' appears 219 times in the document. The specific term accounts for only 21 of them, but from the various contexts it appears likely that many of the other occurrences are actually intended to invoke the more specific concept.

Another issue is that, whereas applicant and claimant are both defined by NIST as being categories of the defined term 'subject', subscriber is defined as being a category of the undefined term 'party'. Minor though such an inconsistency may seem, it creates uncertainty and ambiguity. At a similar level, the previous editions' conflation of authenticators and tokens has been removed, but no definition is provided of the useful meaning of the term 'token'. It is defined in GTAz as "a recording medium on which useful Data is stored, such as one or more (Id)Entifiers, Authenticators and/or Credentials". There is also ambiguity in the use of the expression "bound to", which appears to refer to binding between four different pairs of concepts, in the definition on p.43 and the passages on pp.10 and 14.
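
The GTAz definition of token treats it as a container, distinct from the Authenticators it may hold. A minimal sketch of that distinction, assuming a simple in-memory representation, follows. The class `Token`, its field names, the `holds` method and the example values are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Token:
    """A recording medium on which useful Data is stored, such as one or more
    (Id)Entifiers, Authenticators and/or Credentials (following GTAz)."""
    medium: str                                        # e.g. "smartcard"
    id_entifiers: list[str] = field(default_factory=list)
    authenticators: list[str] = field(default_factory=list)
    credentials: list[str] = field(default_factory=list)

    def holds(self) -> set[str]:
        """Categories of Data currently recorded on this Token."""
        kinds = {"(Id)Entifier": self.id_entifiers,
                 "Authenticator": self.authenticators,
                 "Credential": self.credentials}
        return {kind for kind, items in kinds.items() if items}

# Hypothetical smartcard holding an Identifier and a Credential, but no
# Authenticator (which, say, the cardholder supplies separately).
card = Token("smartcard", id_entifiers=["staff-0042"],
             credentials=["employer-issued staff credential"])
print(card.holds())
```

Because the Token and its contents are modelled separately, a statement such as 'the authenticator is bound to the token' has to say which of the held items is being bound to what.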

Examples used in NIST's text relating to low-risk business processes such as surveys (pp.20-21) represent recognition of the validity of authenticating assertions as to fact, without necessarily authenticating an (id)entity assertion. However, NIST's model does not extend to assertions generally, and hence the opportunity is missed to draw readers towards a broader conception of which assertions the effectiveness of each business process actually depends upon.

The terminology used in expressing the GTA and GTAz models was devised with the intention of achieving completeness, consistency and coherence. If a similarly careful approach is adopted to a revision of the NIST model and guidance, many interpretation difficulties will be surfaced and addressed, and the next revision would deliver far greater clarity and invite far fewer misunderstandings.