Roger Clarke's Web-Site

© Xamax Consultancy Pty Ltd, 1995-2024


Review Version of 24 August 2022

© Xamax Consultancy Pty Ltd, 2022

Available under an AEShareNet licence or a Creative Commons licence.

This document is at http://rogerclarke.com/EC/AITS.html

The original conception of artificial intelligence (old-AI) was as a simulation of human intelligence. That has proven to be an ill-judged quest. In addition to leading too many researchers repetitively down too many blind alleys, the idea embodies far too many threats to individuals, societies and economies. To increase value and reduce harm, it is necessary to redefine the field. A review of the original conception, operational definitions and important examples points to a family of replacement ideas: artefact intelligence, complementary artefact intelligence (CAI), and augmented intelligence (new-AI). However, combining intellect with action leads to broader conceptions of far greater value: complementary artefact capability (CAC) and augmented capability (AC).

As a theme for a research project in the mid-1950s, 'Artificial Intelligence' (AI) was a brilliant idea. As a unifying concept for a field of endeavour, on the other hand, it has always been unsatisfactory. For decades, delivery has fallen short of promises, resulting in disgruntled investors and 'AI winters'. The recent phase of unbridled and insufficiently sceptical conception, development and application of AI systems is coupling overblown expectations with thoughtless and harmful collateral damage to people caught up in the maelstrom. The author's contention is that the root cause of the problems is mis-conception of both the need and the opportunity, resulting in mis-targeted research, and mis-shapen applications.

This article proposes alternative conceptions that have the capacity to bring technologies back under control, greatly reduce their potential for harm, and create new opportunities for constructive designs that can benefit society, the economy, and investors. The argument is pursued in several complementary ways. The article commences by revisiting the original conception of AI, and the notion's drift over time. This is followed by a review of various instantiations of systems within which AI has been embodied, and an outline of how the shift from procedural programming languages, via rule-based systems, to forms of machine-learning, has weakened decision-rationale to the point of extinction.

After acknowledging the potentials, attention is turned to the generic threats that AI embodies, and the prospects of seriously negative impacts, on individuals, on the economy, on societies, and hence on organisations. Unsurprisingly, this is giving rise to a great deal of public concern. Regrettably, the reaction of AI proponents and government policy agencies has not been to address the problem and impose legal safeguards, but instead to mount charm offensives and propose complex but ultimately vacuous 'ethical guidelines' and 'soft law'.

Building on that view of 'old-AI', alternative conceptions are outlined. The first, dubbed here 'new-AI', has a similar field of view, but avoids the existing and critical mis-orientation. The second conception, referred to as 'AC', is broader in scope, and represents a more comprehensive approach to the utilisation of technologies.

This paper combines existing knowledge into an integrated whole. The article's contributions are the provision of a clear rationale for change, combined with a cohesive framework to replace old-AI with new-AI and AC. The intention is to present a somewhat sweeping proposition within the constraints of a journal-article of modest length and hence with economy in the threads of history and technology that are pursued, examples that are proffered, and citations that are provided.

At the time the idea was launched, the AI notion was based on

"the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it" (McCarthy et al. 1955)

"The hypothesis is that a physical symbol system [of a particular kind] has the necessary and sufficient means for general intelligent action" (Simon - variants in 1958, 1969, 1975 and 1996, p.23)

The reference-point was human intelligence, and the intention was to create artificial forms of human intelligence using a [computing] machine.

In a surprisingly short time, however, the 'conjecture' developed into a fervid belief, e.g.:

"Within the very near future - much less than twenty-five years - we shall have the technical capability of substituting machines for any and all human functions in organisations. ... Duplicating the problem-solving and information-handling capabilities of the brain is not far off; it would be surprising if it were not accomplished within the next decade" (Simon 1960)

Herbert Simon (1916-2001) ceased pursuing such propositions over two decades ago; but successor evangelists have kept the belief alive:

"By the end of the 2020s [computers will have] intelligence indistinguishable to biological humans" (Kurzweil 2005, p.25)

So virile is the AI meme that it has been attempting to take over the entire family of information, communication and engineering technologies. Some people are now using the term for any kind of software, developed using any kind of technique, and running in any kind of device. There has also been some blurring of the distinction between AI software and the computer(s) or computer-enhanced device(s) in which it is installed.

Yet AI has had an unhappy existence. It has blown hot and cold through summers and winters, as believers have multiplied, funders have provided resources, funders have become disappointed by the very limited extent to which AI's proponents delivered on their promises, and many believers have faded away again. One major embarrassment was the failure of Japan's much-vaunted 'Fifth Generation Project', a decade-long failure that commenced in 1982 (Pollack 1992).

Many histories have been written, from multiple viewpoints (e.g. Russell & Norvig 2009, pp. 16-28, Boden 2016). This article adopts an abbreviated view of the bifurcation of the field. The original conception represented a 'grand challenge'. Many AI practitioners have divorced themselves from that challenge. They refer to the original notion as 'artificial general intelligence' or 'strong AI', which 'aspires to' replicate human intelligence. They adopt the alternative stance that their work is 'inspired by' human intelligence.

The last decade has seen a prolonged summer for AI technologists and promoters, who have attracted many investors. The author contends that the current euphoria about AI is on the wane, and another hard winter lies ahead. With so much enthusiasm in evidence, and so many bold predictions about the promise of AI, such scepticism is unconventional, and needs to be justified. The first step in doing so is to review some of the many, tangled meanings that people attribute to the term Artificial Intelligence.

People working in AI have had successes. Important examples are techniques for pattern recognition in a wide variety of contexts, including aural signals representing sound, and images representing visual phenomena. Applications of these techniques have involved the engineering of products, leading to more grounded explanations of how to judge whether an artefact is or is not a form of AI.

The following is an interpretation of many attempts to express an operational definition of AI. It is a paraphrase of multiple sources (including Albus 1991, Russell & Norvig 2003 and McCarthy 2007) and not a direct quotation from any single source:

Intelligence is exhibited by an artefact if it:

(1) evidences (a) perception, and (b) cognition, of relevant aspects of its environment;

(2) has goals; and

(3) formulates actions towards the achievement of those goals;

but also (for some commentators at least):

(4) implements those actions.
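The operational definition can be read as a checklist. The sketch below encodes it in Python purely for illustration; the class and function names are hypothetical, and judging whether an artefact really 'perceives' or 'cognises' is the hard part, which no such checklist resolves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ArtefactProfile:
    """Hypothetical record of what an assessor judges an artefact to exhibit."""
    perception: bool          # (1a) perception of relevant aspects of its environment
    cognition: bool           # (1b) cognition of those aspects
    has_goals: bool           # (2) has goals
    formulates_actions: bool  # (3) formulates actions towards those goals
    implements_actions: bool  # (4) implements those actions


def exhibits_intelligence(p: ArtefactProfile, require_action: bool = False) -> bool:
    """Apply the shorter definition (1)-(3), or the longer one (1)-(4) if require_action."""
    core = p.perception and p.cognition and p.has_goals and p.formulates_actions
    return core and (p.implements_actions or not require_action)


# A hypothetical route-planner: it perceives, has goals and plans, but does not act.
route_planner = ArtefactProfile(True, True, True, True, implements_actions=False)
print(exhibits_intelligence(route_planner))                       # True
print(exhibits_intelligence(route_planner, require_action=True))  # False
```

The route-planner satisfies the shorter version (attributes 1 to 3), but fails the longer version favoured by some commentators, because it does not itself implement the actions it formulates.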

A complementary approach to understanding what AI is and does, and what it is not and does not do, is to consider the kinds of artefacts that have been used in an endeavour to deliver it. The obvious category of artefact is computers. The notion goes back to the industrial era, in the first half of the 19th century, with Charles Babbage as engineer and Ada Lovelace as the world's first programmer (Fuegi & Francis 2003). The explosion in innovation associated with electronic digital computers commenced about 1940 and is still continuing.

Computers were intended for computation. However, the capabilities that support computation can also support processes that have other kinds of objectives. A program is generally written with a purpose in mind, thereby providing the artefact with something resembling goals - attribute (2) of the operational definition. Computers can be applied to assist in the 'formulation of actions' - attribute (3) of the operational definition. They can even be used to achieve a kind of 'cognition', if only in the limited sense of categorisation based on pattern-similarity - attribute (1b). Adding 'sensors' that gather or create data representing the device's surroundings provides at least a primitive form of 'perception' of the real world - attribute (1a). So it is not difficult to contrive a simple demonstration of uses of a computer that satisfy the shorter version of the operational definition of AI.

A computer can be extended by means of 'actuators' of various kinds to provide it with the capacity to act on the real world. The resulting artefact is both 'a computer that does' and 'a machine that computes', and hence what is commonly called a 'robot'. The term was invented for a play (Capek 1923), and has been much used in science fiction, particularly by Isaac Asimov during the period 1940-85 (Asimov 1968, 1985). Industrial applications became increasingly effective in structured contexts from about 1960. Drones are flying robots, and submersible drones are swimming robots.

Other categories of artefact can now run software that might satisfy the definition of AI. These include everyday things, such as bus-stops. These now feature display panels that impact people in their vicinity, projecting images selected or devised to sell something to someone. Their sensors may be cameras to detect and analyse faces, or detectors of personal devices that enable access to data about the device-carriers' attributes.

Another category is vehicles. The author's car, a 1994 BMW M3, was an early instance of a vehicle whose fuel input, braking and suspension were supported by an electronic control unit. A quarter-century later, vehicles have far more layers of automation intruding into the driving experience. And of course driverless vehicles depend on software performing much more abstract functions than adapting fuel mix.

For many years, sci-fi novels and feature films have used as a stock character a robot that is designed to resemble humans, referred to as a humanoid. From time to time, it is re-discovered that greater rapport is achieved between a human and a device if the device evidences feminine characteristics. Whereas the notion 'humanoid' is gender-neutral, 'android' (male) and 'gynoid' (female) are gender-specific. The English translation of Capek's play used 'robotess' and more recent entertainments have popularised 'fembot'. This notion was central to an early and much-celebrated AI, called Eliza (Weizenbaum 1966). Household-chore robots, and the voices in digital assistants in the home and car, commonly embody gynoid attributes. Feature films such as 'Her' (2013) and 'Ex Machina' (2014) challenge assumptions about the nature of humanity, and of gender.

A promising, and challenging, category of relevance is cyborgs - that is to say, humans whose abilities are augmented with some kind of artefact, as simple as a walking-stick, or with simple electronics such as a health-condition alert mechanism, or a heart pacemaker. As more powerful and sophisticated computing facilities and software are installed, the prospect exists of AI integrated into a human, and guiding or even directing the human's effectors (Clarke 2005, 2011).

Various such real-world examples can be readily argued to satisfy elements of the definition of AI. Particularly strong cases exist for industrial robots, and for driverless vehicles on rails, in dedicated bus-lanes and in mines. In the case of Mars buggies, the justification is not just economic but also functional. The signal-latency in Earth-with-Mars communications (between about 3 and 22 minutes each way, depending on the planets' relative positions) precludes effective operation by an Earth-bound 'driver'. Such examples pass the test, because there is evidence of:

However, very charitable interpretation is needed to detect evidence of the second-order intellectual capacity that we associate with human intelligence, such as:

Another approach to appreciating the nature of AI is to consider the different ways in which the intellectual aspects of artefact behaviour are brought into being. During the first half-century of the information technology era, software was mostly developed using procedural or imperative languages. These involve the expression of a solution, which in turn requires a clear understanding (if not an explicit definition) of a problem (Clarke 1991). This approach uses genuinely-algorithmic languages, in which a program comprises a sequence of well-defined instructions, including selection and iteration constructs.
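As a minimal sketch of the procedural style, assuming a hypothetical loan-repayment problem that is not drawn from the article, the programmer's understanding of the problem is written down as an explicit solution built from sequence, selection and iteration:

```python
from typing import Optional


def months_to_repay(principal: float, monthly_rate: float, payment: float,
                    max_months: int = 600) -> Optional[int]:
    """Hypothetical example problem: return the month in which a loan is paid
    off, or None if it never is within max_months.

    The solution is stated explicitly, step by step: it presupposes that the
    programmer has understood the problem well enough to write it down.
    """
    balance = principal
    for month in range(1, max_months + 1):                # iteration
        balance = balance * (1 + monthly_rate) - payment  # sequence of steps
        if balance <= 0:                                  # selection
            return month
    return None


print(months_to_repay(1000, 0.0, 100))  # 10
print(months_to_repay(1000, 0.1, 50))   # None: interest exceeds the payment
```

Every behaviour of this program can be traced back to an instruction that a person wrote in order to solve a stated problem.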

Research within the AI field has variously delivered and co-opted a variety of software development techniques. One of particular relevance is rule-based 'expert systems'. Importantly, a rule-set does not embody, and does not even recognise, a problem or a solution. Instead, it defines 'a problem-domain', a space within which cases arise. The idea of a problem is merely a perception by people who are applying some kind of value-system when they observe what goes on in the space.
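By way of contrast, the following sketch uses hypothetical rules and a deliberately naive forward-chaining engine (not any particular expert-system shell). The rule-set only describes relationships within a domain. The engine fires whichever rules a case happens to satisfy; nowhere does the code state a problem or a solution.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (conditions, conclusion): if all conditions hold, the conclusion holds.
Rule = Tuple[FrozenSet[str], str]

# Hypothetical rules describing a toy domain; they state relationships, not a solution.
RULES: List[Rule] = [
    (frozenset({"fever", "cough"}), "suspected_flu"),
    (frozenset({"suspected_flu", "short_of_breath"}), "refer_to_doctor"),
    (frozenset({"rash"}), "suspected_allergy"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Naive forward chaining: keep firing any rule whose conditions are met
    until no new conclusions can be added."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


case = {"fever", "cough", "short_of_breath"}
print(sorted(forward_chain(case, RULES) - case))
# ['refer_to_doctor', 'suspected_flu']
```

Which conclusions emerge depends entirely on the case presented; whether any of them constitutes a 'problem' is a judgement made by the people observing the domain.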

Another relevant software development technique is AI/ML (machine-learning) and its most widely-discussed form, artificial neural networks (ANN). The original conception of AI referred to learning as a primary feature of (human) intelligence. The ANN approach, however, adopts a narrow focus on data that represents instances. There is not only no sense of problem or solution, but also no model of the problem-domain. There's just data, with meta-data provided or generated from within the data-set. It is implicitly assumed that the world can be satisfactorily understood, and decisions made, and actions taken, on the basis of the similarity of a new case to the 'training set' of cases that have previously been reflected in the software.
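The reliance on similarity to previously-seen cases can be illustrated with k-nearest-neighbours, a simpler instance-based technique substituted here for an ANN because its assumption is easy to see. The training data and labels are hypothetical. The only 'reason' the code can give for its output is that the most similar past cases carried that label.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

# Hypothetical 'training set': (feature vector, label) pairs from past cases.
TRAINING: List[Tuple[Tuple[float, float], str]] = [
    ((1.0, 1.0), "approve"), ((1.2, 0.9), "approve"), ((0.9, 1.1), "approve"),
    ((5.0, 5.0), "decline"), ((5.2, 4.8), "decline"), ((4.9, 5.1), "decline"),
]


def knn_classify(training, new_case: Sequence[float], k: int = 3) -> str:
    """Label a new case with the majority label of its k most similar past cases.

    There is no model of the domain here: similarity (Euclidean distance)
    to previously-seen cases is the whole of the 'reasoning'.
    """
    nearest = sorted(training, key=lambda item: math.dist(item[0], new_case))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(knn_classify(TRAINING, (1.1, 1.0)))     # approve
print(knn_classify(TRAINING, (5.0, 4.9)))     # decline
print(knn_classify(TRAINING, (100.0, -50.0)))  # decline
```

The last case resembles nothing in the training set, yet it receives a label just as confidently as the others, because 'nearest' is always defined even when nothing is near.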

Discussions of AI/ML frequently use the term 'algorithmic', as in the expression 'algorithmic bias'. This is misleading, because ANNs in particular are not algorithmic, but rather entirely empirical. ANNs are also not scientific in the sense of providing a coherent, systematic explanation of the behaviour of phenomena. Science requires theory about the world, directed empirical observation, and feedback to correct, refine or replace the theory. AI/ML embodies no theory about the world. The detachment between real-world needs and machine-internal processing, which emerged with rule-based systems, is pursued to completion by AI/ML. The absence of any reliable relationship with the real world underpins many of the issues identified below.

So far, this review has considered the original conception of AI, an operational definition of AI, some key examples of AI embodied in artefacts, and some important AI techniques. Together, these provide a basis for consideration of AI's impacts.

The primary concern of this analysis is with downsides. There is no shortage of wide-eyed optimism about what AI might do for humankind - although many claims are nothing more than vague marketing-speak. The more credible arguments relate to:

AI embodies threats to human interests. There have been many expressions of serious concern about AI, including by theoretical physicist Stephen Hawking (Cellan-Jones 2014), Microsoft billionaire Bill Gates (Mack 2015), and technology entrepreneur Elon Musk (Sulleyman 2017). However, critics seldom make clear quite what they mean by AI, and their concerns tend to be long lists with limited structure; so progress in understanding and addressing the problems has been glacially slow. Some useful sources include Dreyfus 1972, Weizenbaum 1976, Scherer (2016, esp. pp. 362-373), Yampolskiy & Spellchecker (2016), Mueller (2016) and Duursma (2018).

This author associates the negative impacts and implications of AI with 5 key features (Clarke 2019a). The first of these is Artefact Autonomy. We're prepared to delegate to cars and aircraft straightforward, technical decisions about fuel mix and gentle adjustments of flight attitude. On the other hand, we are, and we need to be, much more careful about granting delegations to artefacts in relation to the more challenging categories of decision. Table 1 identifies some key characteristics of challenging decisions, drawing in particular on Weizenbaum (1976) and Dreyfus (1992). Serious problems arise when authority is delegated to an artefact that lacks the capability to draw inferences, make decisions and take actions that are reliable, fair, or 'right', according to any of the stakeholders' value-systems.


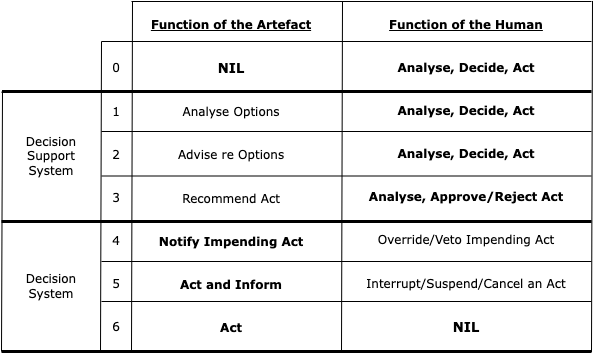

Where at least some autonomy is tenable, a framework is needed within which the degree of autonomy can be discussed and decided. Figure 1 distinguishes 6 levels of autonomy, of which levels 1-3 are of the nature of decision support systems, and levels 4-6 are decision systems. At levels 1-3, the human is in charge, although an artefact might unduly or inappropriately influence the human's decision. At levels 4-5, on the other hand, the artefact is primary, with the human having a window of opportunity to influence the outcomes. At level 6, it is not possible for the human to exercise control over the act performed by the artefact.
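The distinctions drawn in the paragraph above can be expressed as a small lookup. This is a sketch only, with hypothetical names: it captures just the three properties stated here (type of system, which party is primary, and whether the human can still influence the outcome), and deliberately omits the level names and descriptions used in Figure 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutonomyProfile:
    """Hypothetical summary of one autonomy level's properties."""
    level: int
    system_kind: str           # 'decision support system' or 'decision system'
    primary_party: str         # 'human' or 'artefact'
    human_can_influence: bool  # can a human still affect the outcome?


def classify_autonomy(level: int) -> AutonomyProfile:
    """Map a level (1-6) to the properties stated in the text; sketch only."""
    if not 1 <= level <= 6:
        raise ValueError("the framework distinguishes levels 1 to 6 only")
    if level <= 3:
        # The human is in charge, although the artefact may still unduly influence them.
        return AutonomyProfile(level, "decision support system", "human", True)
    # Levels 4-6: the artefact is primary. At 4-5 the human has a window of
    # opportunity to influence the outcome; at 6 the human has no control.
    return AutonomyProfile(level, "decision system", "artefact", level <= 5)


for lvl in range(1, 7):
    p = classify_autonomy(lvl)
    print(lvl, p.system_kind, p.primary_party, p.human_can_influence)
```

Any real assessment would attach far more to each level (who may override, within what time-window, with what information); the sketch shows only that the boundaries between levels 3 and 4, and between 5 and 6, are where the human's role changes in kind.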

The second feature underlying concerns about AI is Inappropriate Assumptions about Data. There are many obvious data quality factors, including accuracy, precision, timeliness, completeness, the general relevance of each data-item, and the specific relevance of the particular content of each data-item (Wang & Strong 1996). Another consideration that is all too easy to overlook is the correspondence of the data with the real-world phenomena that the process assumes it to represent. That depends on appropriate identity association, attribute association and attribute signification (Clarke 2016).
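Some of the listed quality factors can be measured mechanically. The following sketch, assuming hypothetical record fields ('name', 'postcode', 'updated') and an arbitrary staleness threshold, computes completeness and timeliness. Note what it cannot do: nothing in it tests whether the data corresponds to the real-world entities and attributes it is assumed to represent.

```python
from datetime import date
from typing import Dict, List, Sequence


def completeness(records: List[Dict], required_fields: Sequence[str]) -> float:
    """Share of records in which every required field is present and non-empty."""
    if not records:
        return 0.0
    complete = sum(
        all(r.get(f) not in (None, "") for f in required_fields) for r in records
    )
    return complete / len(records)


def stale_share(records: List[Dict], as_of: date, max_age_days: int,
                date_field: str = "updated") -> float:
    """Share of dated records last updated more than max_age_days before as_of."""
    dated = [r[date_field] for r in records if r.get(date_field) is not None]
    if not dated:
        return 0.0
    stale = sum((as_of - d).days > max_age_days for d in dated)
    return stale / len(dated)


# Hypothetical records; field names and values are illustrative only.
records = [
    {"name": "A. Citizen", "postcode": "2600", "updated": date(2022, 1, 10)},
    {"name": "B. Resident", "postcode": None, "updated": date(2015, 6, 1)},
]
print(completeness(records, ["name", "postcode"]))  # 0.5
print(stale_share(records, date(2022, 8, 24), 365))  # 0.5
```

A record can score perfectly on both measures and still be attributed to the wrong person, which is why identity association and attribute signification need separate assurance.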

It is common in data analytics generally, and in AI-based data analytics, to draw data from multiple sources. That brings in additional factors that can undermine the appropriateness of inferences arising from the analysis and create serious risks for people affected by the resulting decisions. Inconsistent definitions and quality-levels among the data-sources are seldom considered (Widom 1995). Data scrubbing (or 'cleansing') may be applied; but this is a dark art, and most techniques generate errors in the process of correcting other errors (Mueller & Freytag 2003). Claims are made that, with sufficiently large volumes of data, the impacts of low-quality data, matching errors, and low scrubbing-quality automatically smooth themselves out. This may be a justifiable claim in specific circumstances, but in many cases it is a magical incantation that does not hold up under cross-examination (boyd & Crawford 2012).
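The claim that large volumes smooth out data problems can be tested with a simple simulation using made-up parameters. Independent random errors do average away as volumes grow, but a systematic error, such as one source using a different definition of the data-item, survives any volume of data:

```python
import random
import statistics


def mean_of_records(n: int, true_value: float, noise_sd: float,
                    systematic_bias: float = 0.0, seed: int = 1) -> float:
    """Mean of n simulated measurements of one true value (made-up model).

    Each record carries independent random noise; systematic_bias models an
    error shared by every record (e.g. a source's differing definition).
    """
    rng = random.Random(seed)
    return statistics.fmean(
        true_value + systematic_bias + rng.gauss(0.0, noise_sd) for _ in range(n)
    )


for n in (100, 10_000, 100_000):
    random_only = mean_of_records(n, true_value=50.0, noise_sd=5.0)
    with_bias = mean_of_records(n, true_value=50.0, noise_sd=5.0, systematic_bias=10.0)
    print(n, round(random_only, 2), round(with_bias, 2))
# As n grows, the random-only mean approaches 50, but the biased mean stays near 60.
```

More data merely makes the wrong answer more precise; it does not make it right.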

Also far too common are Inappropriate Assumptions about the Inferencing Process that is applied to data. Each approach has its own characteristics, and is applicable to some contexts but not others. Despite that, training courses and documentation seldom communicate much information about the dangers inherent in mis-application of each particular technique. One issue is that data analytics practitioners frequently assume quite blindly that the data that they have available is suitable for the particular inferencing process they choose to apply. Data on nominal, ordinal and even cardinal scales is not suitable for the more powerful tools, because they require data on ratio scales. It is a fatal flaw to assume that ordinal data (typically 'most/more/middle/less/least') is ratio-scale data. Mixed-mode data is particularly challenging. Meanwhile, the strategy adopted to deal with missing values is alone sufficient to deliver spurious results; yet the choice is often implicit rather than a rational decision based on a risk assessment. For any significant decision, assurance is needed of the data's suitability for use with each particular inferencing process.
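Two of the dangers mentioned above can be demonstrated with toy, hypothetical data. First, ordinal codes are arbitrary apart from their order, so two equally legitimate codings can reverse which group has the higher mean. Second, the choice between dropping missing values and filling them with zero changes the result, even though neither choice is visible in the output.

```python
from statistics import fmean
from typing import List, Optional

LEVELS = ["least", "less", "middle", "more", "most"]
# Two codings that both preserve the order of the levels, so both are equally
# 'valid' for ordinal data; the gaps between codes carry no meaning.
CODING_A = dict(zip(LEVELS, [1, 2, 3, 4, 5]))
CODING_B = dict(zip(LEVELS, [1, 2, 3, 4, 10]))

# Hypothetical survey responses from two groups.
group_x = ["middle", "middle", "middle", "middle"]
group_y = ["least", "least", "least", "most"]


def mean_code(responses: List[str], coding: dict) -> float:
    """Treats ordinal codes as if they were ratio-scale numbers (the flaw)."""
    return fmean([coding[r] for r in responses])


print(mean_code(group_x, CODING_A), mean_code(group_y, CODING_A))  # 3.0 2.0
print(mean_code(group_x, CODING_B), mean_code(group_y, CODING_B))  # 3.0 3.25


def mean_dropping_missing(values: List[Optional[float]]) -> float:
    return fmean([v for v in values if v is not None])


def mean_zero_filling(values: List[Optional[float]]) -> float:
    return fmean([0.0 if v is None else v for v in values])


incomes = [10.0, None, 30.0, None, 20.0]  # hypothetical, two values missing
print(mean_dropping_missing(incomes), mean_zero_filling(incomes))  # 20.0 12.0
```

Under coding A, group X scores higher; under coding B, group Y does. Neither result says anything about the respondents; both are artefacts of treating ordinal data as ratio-scale.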

The fourth feature is the Opaqueness of Inferencing Processes. As discussed above, some techniques used in AI are merely empirical, rather than being scientifically-based. Because the AI/ML method of artificial neural networks is not algorithmic, no rationale, in the sense of a procedural or rule-based explanation, can be provided for the inferences that have been drawn. Inferences from artificial neural networks are a-rational, i.e. not supportable by a logical explanation. Courts, coroners and ombudsmen demand explanations for the actions taken by people and organisations. Unless a decision-process is transparent, the analysis cannot be replicated, the process cannot be subjected to audit by a third party, the errors in the design cannot even be discovered let alone corrected, and guilty parties can escape accountability for harm they cause. The legal principles of natural justice and procedural fairness are crucial to civil behaviour. And they are under serious threat from some forms of AI.

The dilution of accountability is closely associated with the fifth major feature of AI, which is Irresponsibility. The AI supply-chain runs from laboratory experiment, via IR&D, to artefacts that embody the technology, to systems that incorporate the artefacts, and on to applications of those systems, and deployment in the field. Successively, researchers, inventors, innovators, purveyors, and users bear moral responsibility for disbenefits arising from AI. But the laws of many countries do not impose legal responsibility. The emergent pseudo-regulatory regime in Europe for AI actually absolves some of the players from incurring liability for their part in harmful technological innovation (Clarke 2022a).

These five generic features together mean that AI that may be effective in controlled environments (such as factories, warehouses, thinly human-populated mining sites, and distant planets) faces far greater challenges in unstructured contexts with high variability and unpredictability (e.g. public roads, households, and human care applications). In the case of human-controlled aircraft, laws impose responsibilities for collision-detection capability, for collision-avoidance functionality, and for training in the rational thought-processes to be applied when the integrity of location information, vision, communications, power, fuel or the aircraft itself is compromised. On the other hand, devising and implementing such capabilities in mostly-autonomous drones is very challenging.

To get to grips with what AI means for people, organisations, economies and societies, those generic threats have to be considered in a wide variety of different contexts. Many people have reacted in a paranoid manner, giving graphic descriptions, and using compelling imagery such as 'the robot apocalypse', 'attack drones' and 'cyborgs with runaway enhancements'. In sci-fi novels and films, sceptical and downright dystopian treatment of AI-related ideas has a long and distinguished history. Some of the key ideas can be traced through these examples:

These sources are rich and interesting in the ideas they offer (and at least to some extent prescient). However, what we really need are categories and examples grounded in real-world experience, and carefully projected into plausible futures using scenario analysis. To the extent that AI delivers on its promise, the following are natural outcomes:

To the extent that these threads continue to develop, they are likely to mutually reinforce, resulting in the removal of self-determination and meaningfulness from at least some people's lives.

This discussion has had its main focus on impacts on individuals, societies and the economy. Organisations are involved in the processes whereby the impacts arise. They are also themselves affected, both directly and indirectly. AI-derived decisions and actions that prove to have been unwise undermine the reputations of and trust in organisations that have deployed AI. That will inevitably have negative impacts on adoption, deployment success and return on investment.

The value of artefacts has been noted in 'dull, dirty and dangerous work', and where they are demonstrably more effective than humans, particularly where computational reliability and speed of inference, decision and action are vital. In such circumstances, the human race has a century of experience in automation, and careful engineering design, testing and management of AI components within such decision systems is doubtless capable of delivering some benefits. However, rational product development processes require far greater investment than is apparent to date in either impact assessment or multi-stakeholder risk assessment (Clarke 2022).

There are also contexts in which it is particularly crucial that decision-making be reserved for humans. These are contexts that exhibit characteristics inherent in important real-world decision-making, including complexity, uncertainty, ambiguity, variability, fluidity, value-content, multiple stakeholders and value-conflicts. See Table 1.

In all such circumstances, AI may assist in the design of decision support systems - but with important provisos. AI is essentially empirically-based, and incapable of providing explanations of an underlying rationale. So it must either be avoided, or handled very sceptically and carefully, subjected to safeguards and mitigation measures, and with controls imposed to ensure the safeguards and mitigation measures are functioning as intended.

The so-called 'precautionary principle' (Wingspread 1998) is applicable to any potentially very harmful technology and is subject to statutory provisions in environmental law. It needs to be considered for all forms of AI and autonomous artefact behaviour. At the very least, there is a need for Principles for Responsible AI to be not merely talked about, and not merely the subject of 'soft law', but actually imposed as legal requirements (Clarke 2019b, 2019c). Beyond that, particularly dangerous techniques and applications need to be subject to moratoria, which need to remain until an adequate regulatory regime is in place.

More than enough experience has been gathered about 'Old-AI' approaches, and more than enough evidence exists of their deficiencies, and the harm that they cause. The world needs an alternative way of seeing and doing 'smart' systems, and approaches that will enable both the achievement of positive outcomes and the prevention, control and mitigation of harmful impacts. This final section draws on the analysis presented earlier, provides a rationale underlying re-conception of the field, and then proposes an articulated set of ideas that are contended to deliver what we all need.

A starting-point is recognition of the fact that there are 8 billion humans already. Society gains very little by creating artefacts that think like humans. The idea of 'artificial intelligence' was mis-directed, and has resulted in a great deal of wasted effort during the last 70 years. We don't want 'artificial'; we want real. We don't want more intelligence of a human kind; we want artefacts to contribute to our intellectual endeavours. Useful intelligence in an artefact, rather than being like human intelligence, needs to be not like it, and instead constructively different from it.

One useful redefinition of 'AI' would be as 'Artefact Intelligence' (Clarke 2019a, pp.429-431). This is not a new term. For example, Takeda et al. (2002, pp.1-2) defined it as "intelligence that maximizes functionality of the artifact", with the challenge being "to establish intentional/physical relationship between humans and artifacts". A similar term, 'artefactual intelligence', was described by De Leon (2003) as "a measure of fit among instruments, persons, and procedures taken together as an operational system".

The valuable form is usefully called 'Complementary Artefact Intelligence' (CAI), whose characteristics are:

(1) Effective performance of intellectual functions that humans do poorly or cannot do at all;

(2) Performance of those intellectual functions within systems that include both humans and artefacts; and

(3) Effective, efficient and adaptable interfacing with both humans and other artefacts.

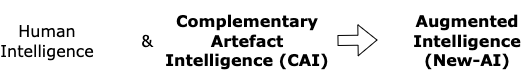

However, we need to lift our ambitions higher than Artefact Intelligence. Our focus needs to be on devising Artefact Intelligence so that it combines with Human Intelligence to deliver something new that is superior to either of them. The appropriate term for this construct is 'Augmented Intelligence' ('New-AI'). By this is meant:

The integration of Human Intelligence and Complementary Artefact Intelligence into a whole that is different from, and superior to, either working alone

The idea of 'augmented intelligence' has a long and to some extent cumulative history. Ashby (1956) proposed the notion of 'intelligence amplification' and Engelbart (1962) was concerned with 'augmenting human intellect'. More recently, Zheng et al. (2017) used the cumbersome term 'Human-in-the-loop hybrid-augmented intelligence', but provided some articulation of the process. An article in the business trade press has depicted 'augmented intelligence' as "an alternative conceptualization of AI that focuses on its assistive role in advancing human capabilities" (Araya 2019).

An IEEE Division has referred to 'augmented intelligence' as using machine learning and predictive analytics not to replace human intelligence but to enhance it (IEEE-DR 2019). Computers are regarded as tools for mind extension in much the same way as tools are extensions of the body, or as extensions of the human capability of action. However, by describing the human-computer relationship using the biological term 'symbiotic', the 2019 paper risks artefacts being treated as equals with humans rather than conceiving them as being usefully different from and complementary to people. A further issue is that the paper expressly adopts the flighty transhumanism and posthumanism notions, which postulate that a transition will occur to a new species driven by technology rather than genetics.

A related line of thinking is in Wang et al. (2021), which provides a summation of the IEEE Symbiotic Autonomous Systems Initiative (SASI) (although it appears to have since been absorbed within the IEEE's Digital Reality division). The SASI initiative proposes the appropriate focus as being Symbiotic Autonomous Systems (SAS): "advanced intelligent and cognitive systems embodied by computational intelligence in order to facilitate collective intelligence among human-machine interactions in a hybrid society" (p.10). This approach is reductionist. It stands at least in contrast with, and even in conflict with, the notion of socio-technical systems. It is framed in a manner that acknowledges artefacts as at least equal members in a "hybrid society", and arguably the superior partner. The sci-fi of Isaac Asimov and Arthur C. Clarke anticipated the gradual ceding of power by humans to artefacts; but it is far from clear that contemporary humans are ready to do that, and far from clear that technology is even capable of taking on that responsibility, let alone ready to do so.

Sci-fi-originated and somewhat metaphysical, even mystical, ideas deflect attention away from the key issues. We need to move beyond the dysfunctional notion of 'old-AI'. We need to focus on artefacts as tools to support humans. Humans of the 21st century want 'Humans+', but achieved by federating with artefacts, rather than by uniting with them, by becoming them, or by their becoming us.

Re-conception of AI from Artificiality to Augmentation is, on its own, capable of delivering great benefits. It deters product design and application development from limiting their focus to artefacts. It demands underlying techniques that support designs for socio-technical systems, within which artefacts must embody intellectual capabilities that are distinct from, and complementary to, those of people (Abbas et al. 2021). Attention must also be paid to the effectiveness and efficiency of interactions between the people and the artefacts. The risk remains, however, that designers will think only of the humans who directly interact with the artefact ('users'), and will overlook the interests of other humans who are affected by the operation of the socio-technical system ('usees' - Clarke 1992, Fischer-Huebner & Lindskog 2001, Baumer 2015). Nonetheless, considerable improvements can be achieved by re-discovering technology-in-use as the proper focus of design.

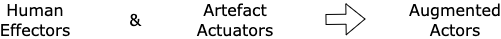

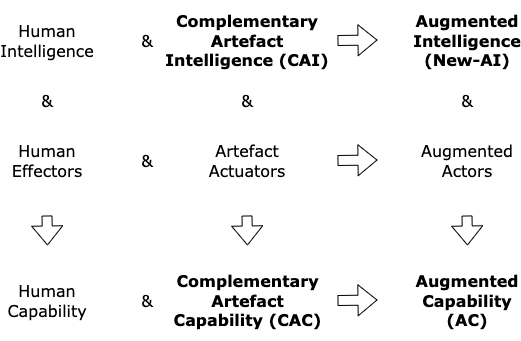

A further step remains, one that extends beyond the intellectual realm. Beyond inferences and decisions lie actions. Humans act through effectors like arms and fingers. Artefacts, in order to act in the world, are designed to include actuators such as robotic arms that exert force on real-world phenomena. In the same way that Artefact Intelligence is most valuable when it is designed to complement Human Intelligence, Artefact Actuators can be developed with the aim of complementing Human Effectors, resulting in Augmented Actors that are compounds of humans and machines.

The capability of action arises from the combination of the intellectual and the physical. Human Capability arose not merely from the ability to oppose forefinger and thumb, but also from the intellectual realisation that something useful can be done with things held between the two body-parts. Artefacts' direct real-world impact depends on the combination of Artefact Intelligence with suitable Actuators.

The final part of the re-conception is to prioritise Complementary Artefact Capability (CAC), which dovetails with Human Capability to produce a powerful form of synergy. By coordinating the intellectual and physical characteristics of both humans and artefacts, we can achieve a combined capability of action superior to what either humans or artefacts can achieve alone. This is usefully described as Augmented Capability (AC).

The argument advanced in this article has been that the conception of 'old-AI' is inappropriate and harmful. The question remains as to what role techniques associated with 'old-AI' can play within the replacement conception of smart systems. To draw conclusions, each technique's suitability needs to be re-evaluated in terms of its fit to the new contexts of new-AI, CAI, CAC and AC.

A technique has merit if it is scientifically based, blending theory about the real world with empirical insights; but it must be viewed sceptically and managed very carefully if it is purely empirical in nature. If an artefact, or a human examining an artefact, can explain the rationale underlying the inferences it draws, and justify any decisions it takes or actions it performs, it has a role to play in socio-technical systems; otherwise, its fitness for purpose is seriously questionable. If the Artefact Intelligence replaces Human Intelligence, as part of a decision system, its applicability is limited to purely technical systems; whereas if it augments Human Intelligence, as part of a decision support system, it may also be a valuable contributor within socio-technical systems. Where a system includes actuators and the Artefact Capability replaces Human Capability, it may be effective as part of a purely technical decision-and-action system, but its relevance to a system with substantial human or social elements is severely circumscribed. On the other hand, to the extent that the Artefact Capability complements Human Capability, the combined Augmented Capability represents a decision-and-action support system suitable for application to socio-technical systems.
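The criteria in the preceding paragraph can be read as a rough checklist. The following Python sketch encodes them as a hypothetical rubric, purely to make the branching explicit. The `Technique` fields and the `assess` function are illustrative assumptions, not an instrument proposed in this article, and they reduce what are really matters of degree to simple yes/no values.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Technique:
    """Hypothetical summary of a technique against the article's criteria."""
    scientifically_based: bool  # blends real-world theory with empirical insight
    explainable: bool           # rationale and justification can be given
    replaces_humans: bool       # True: decision system; False: decision support
    has_actuators: bool         # system acts in the world, not just infers


def assess(t: Technique) -> List[str]:
    """Return the verdicts from the text that apply to a technique (illustrative only)."""
    findings: List[str] = []
    if not t.scientifically_based:
        findings.append("purely empirical: view sceptically and manage very carefully")
    if not t.explainable:
        findings.append("fitness for purpose seriously questionable")
    if t.replaces_humans:
        # Replacement confines the technique to purely technical systems.
        scope = ("purely technical decision-and-action systems" if t.has_actuators
                 else "purely technical decision systems")
        findings.append(f"applicability limited to {scope}")
    else:
        # Augmentation opens a role within socio-technical systems.
        role = ("decision-and-action support (Augmented Capability)" if t.has_actuators
                else "decision support (Augmented Intelligence)")
        findings.append(f"candidate contributor to socio-technical systems as {role}")
    return findings


if __name__ == "__main__":
    print(assess(Technique(True, True, False, True)))
```

For example, a scientifically based, explainable technique that complements people and includes actuators yields a single finding, identifying it as a candidate for decision-and-action support. The booleans are a deliberate simplification; in practice each criterion would call for judgement rather than a flag.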

This article has provided an interpretation of the past of Artificial Intelligence (old-AI) together with an analysis of artefact autonomy, in order to propose a different conception of Augmented Intelligence (new-AI), and a shift in focus beyond even that, to a more comprehensive conception, Augmented Capability (AC). Digital and intellectual technologies offer the human race enormous possibilities. The author contends that the new-AI and AC notions can quickly lead us towards appropriate ways to apply technologies while managing the potentially enormous threats that they harbour for individuals, economies and societies.

Abbas R., Pitt J. & Michael K. (2021) 'Socio-Technical Design for Public Interest Technology' Editorial, IEEE Trans. on Technology and Society 2,2 (June 2021) 55-61, at https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=9459499

Adensamer A., Gsenger R. & Klausner L.D. (2021) '"Computer says no": Algorithmic decision support and organisational responsibility' Journal of Responsible Technology 7-8 (October 2021) 100014, at https://www.sciencedirect.com/science/article/pii/S266665962100007X

Albus J.S. (1991) 'Outline for a theory of intelligence' IEEE Trans Syst, Man Cybern 21, 3 (1991) 473-509, at http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.410.9719&rep=rep1&type=pdf

Araya D. (2019) '3 Things You Need To Know About Augmented Intelligence' Forbes Magazine, 22 January 2019, at https://www.forbes.com/sites/danielaraya/2019/01/22/3-things-you-need-to-know-about-augmented-intelligence/?sh=5ee58aa93fdc

Ashby R. (1956) 'Design for an Intelligence-Amplifier' in Shannon C.E. & McCarthy J. (eds.) 'Automata Studies' Princeton University Press, 1956, pp. 215-234

Asimov I. (1968) 'I, Robot' (a collection of short stories originally published between 1940 and 1950), Grafton Books, London, 1968

Asimov I. (1985) 'Robots and Empire' Grafton Books, London, 1985

Baumer E.P.S. (2015) 'Usees' Proc. 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI'15), April 2015, at http://ericbaumer.com/2015/01/07/usees/

Bennett Moses L. & Chan J. (2018) 'Algorithmic prediction in policing: assumptions, evaluation, and accountability' Policing and Society 28, 7 (2018) 806-822, at https://www.tandfonline.com/doi/full/10.1080/10439463.2016.1253695

Boden M. (2016) 'AI: Its Nature and Future' Oxford University Press, 2016

Borgesius F.J.Z. (2020) 'Strengthening legal protection against discrimination by algorithms and artificial intelligence' Intl J. of Human Rights 24, 10 (2020) 1572-1593, at https://www.tandfonline.com/doi/pdf/10.1080/13642987.2020.1743976

boyd D. & Crawford K. (2011) 'Six Provocations for Big Data' Proc. Symposium on the Dynamics of the Internet and Society, September 2011, at http://ssrn.com/abstract=1926431

Brunner J. (1975) 'The Shockwave Rider' Harper & Row, 1975

Capek K. (1923) 'R.U.R (Rossum's Universal Robots)' Doubleday Page and Company, 1923 (orig. published in Czech, 1918, 1921)

Cellan-Jones R. (2014) 'Stephen Hawking warns artificial intelligence could end mankind' BBC News, 2 December 2014, at http://www.bbc.com/news/technology-30290540

Clarke R. (1991) 'A Contingency Approach to the Application Software Generations' Database 22, 3 (Summer 1991) 23-34, PrePrint at http://www.rogerclarke.com/SOS/SwareGenns.html

Clarke R. (1992) 'Extra-Organisational Systems: A Challenge to the Software Engineering Paradigm' Proc. IFIP World Congress, Madrid, September 1992, at http://rogerclarke.com/SOS/PaperExtraOrgSys.html

Clarke R. (2005) 'Human-Artefact Hybridisation: Forms and Consequences' Invited Presentation to the Ars Electronica 2005 Symposium on Hybrid - Living in Paradox, Linz, Austria, September 2005, PrePrint at http://rogerclarke.com/SOS/HAH0505.html

Clarke R. (2011) 'Cyborg Rights' IEEE Technology and Society 30, 3 (Fall 2011) 49-57, PrePrint at http://rogerclarke.com/SOS/CyRts-1102.html

Clarke R. (2016) 'Big Data, Big Risks' Information Systems Journal 26, 1 (January 2016) 77-90, PrePrint at http://rogerclarke.com/EC/BDBR.html

Clarke R. (2019a) 'Why the World Wants Controls over Artificial Intelligence' Computer Law & Security Review 35, 4 (Jul-Aug 2019) 423-433, at https://doi.org/10.1016/j.clsr.2019.04.006, PrePrint at http://rogerclarke.com/EC/AII.html

Clarke R. (2019b) 'Principles and Business Processes for Responsible AI' Computer Law & Security Review 35, 4 (Jul-Aug 2019) 410-422, at https://doi.org/10.1016/j.clsr.2019.04.007, PrePrint at http://rogerclarke.com/EC/AIP.html

Clarke R. (2019c) 'Regulatory Alternatives for AI' Computer Law & Security Review 35, 4 (Jul-Aug 2019) 398-409, at https://doi.org/10.1016/j.clsr.2019.04.008, PrePrint at http://rogerclarke.com/EC/AIR.html

Clarke R. (2022a) 'Responsible Application of Artificial Intelligence to Surveillance: What Prospects?' Information Polity 27, 2 (Jun 2022) 175-191, Special Issue on 'Questioning Modern Surveillance Technologies', PrePrint at http://rogerclarke.com/DV/AIP-S.html

Clarke R. (2022b) 'Evaluating the Impact of Digital Interventions into Social Systems: How to Balance Stakeholder Interests' Working Paper for ISDF, 1 June 2022, at http://rogerclarke.com/DV/MSRA-VIE.html

De Leon D. (2003) 'Artefactual Intelligence: The Development and Use of Cognitively Congenial Artefacts' Lund University Press, 2003

Dick P.K. (1968) 'Do Androids Dream of Electric Sheep?' Doubleday, 1968

Dreyfus H.L. (1972) 'What Computers Can't Do' MIT Press, 1972; Revised edition as 'What Computers Still Can't Do', 1992

Duursma J. (2018) 'The Risks of Artificial Intelligence' Studio OverMorgen, May 2018, at https://www.jarnoduursma.nl/the-risks-of-artificial-intelligence/

Engelbart D.C. (1962) 'Augmenting Human Intellect: A Conceptual Framework' SRI Summary Report AFOSR-3223, Stanford Research Institute, October 1962, at https://dougengelbart.org/pubs/augment-3906.html

Engstrom D.F., Ho D.E., Sharkey C.M. & Cuéllar M.-F. (2020) 'Government by Algorithm: Artificial Intelligence in Federal Administrative Agencies' NYU School of Law, Public Law Research Paper No. 20-54, April 2020, at https://ssrn.com/abstract=3551505

Fischer-Huebner S. & Lindskog H. (2001) 'Teaching Privacy-Enhancing Technologies' Proc. IFIP WG 11.8 2nd World Conference on Information Security Education, Perth, 2001, at http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.24.3950&rep=rep1&type=pdf

Forster E.M. (1909) 'The Machine Stops' Oxford and Cambridge Review, November 1909, at https://www.cs.ucdavis.edu/~koehl/Teaching/ECS188/PDF_files/Machine_stops.pdf

Fuegi J. & Francis J. (2003) 'Lovelace & Babbage and the Creation of the 1843 "Notes"' IEEE Annals of the History of Computing 25, 4 (October-December 2003) 16-26, at https://www.scss.tcd.ie/Brian.Coghlan/repository/J_Byrne/A_Lovelace/J_Fuegi_&_J_Francis_2003.pdf

Gibson W. (1984) 'Neuromancer' Ace, 1984

IEEE-DR (2019) 'Symbiotic Autonomous Systems: White Paper III' IEEE Digital Reality, November 2019, at https://digitalreality.ieee.org/images/files/pdf/1SAS_WP3_Nov2019.pdf

Kurzweil R. (2005) 'The singularity is near' Viking Books, 2005

Mack E. (2015) 'Bill Gates says you should worry about artificial intelligence' Forbes Magazine, 28 January 2015, at https://www.forbes.com/sites/ericmack/2015/01/28/bill-gates-also-worries-artificial-intelligence-is-a-threat/

McCarthy J. (2007) 'What is artificial intelligence?' Department of Computer Science, Stanford University, 2007, at http://www-formal.stanford.edu/jmc/whatisai/node1.html

McCarthy J., Minsky M.L., Rochester N. & Shannon C.E. (1955) 'A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence' Reprinted in AI Magazine 27, 4 (2006), at https://www.aaai.org/ojs/index.php/aimagazine/article/viewFile/1904/1802

Mueller V.C. (ed.) (2016) 'Risks of general intelligence' CRC Press, 2016

Pollack A. (1992) '"Fifth Generation" Became Japan's Lost Generation' The New York Times, 5 June 1992, at https://www.nytimes.com/1992/06/05/business/fifth-generation-became-japan-s-lost-generation.html

Russell S.J. & Norvig P. (2003) 'Artificial intelligence: a modern approach' 2nd edition, Prentice Hall, 2003, 3rd ed. 2009, 4th ed. 2020

Scherer M.U. (2016) 'Regulating Artificial Intelligence Systems: Risks, Challenges, Competencies, and Strategies' Harvard Journal of Law & Technology 29, 2 (Spring 2016) 354-400

Simon H.A. (1960) 'The shape of automation' Reprinted in various forms, 1960, 1965, quoted in Weizenbaum J. (1976), pp. 244-245

Simon H.A. (1996) 'The sciences of the artificial' 3rd ed., MIT Press, 1996

Smith A. (2021) 'Face scanning and "social scoring" AI can have "catastrophic effects" on human rights, UN says' The Independent, 16 September 2021, at https://www.independent.co.uk/tech/artificial-intelligence-united-nations-face-scan-social-score-b1921311.html

Stephenson N. (1995) 'The Diamond Age' Bantam Books, 1995

Sulleyman A. (2017) 'Elon Musk: AI is a "fundamental existential risk for human-civilisation" and creators must slow down' The Independent, 17 July 2017, at https://www.independent.co.uk/life-style/gadgets-and-tech/news/elon-musk-ai-human-civilisation-existential-risk-artificial-intelligence-creator-slow-down-tesla-a7845491.html

Takeda H., Terada K. & Kawamura T. (2002) 'Artifact intelligence: yet another approach for intelligent robots' Proc. 11th IEEE Int'l Wksp on Robot and Human Interactive Communication, September 2002, at http://www-kasm.nii.ac.jp/papers/takeda/02/roman2002

Wang R.Y. & Strong D.M. (1996) 'Beyond Accuracy: What Data Quality Means to Data Consumers' Journal of Management Information Systems 12, 4 (Spring, 1996) 5-33, at http://mitiq.mit.edu/Documents/Publications/TDQMpub/14_Beyond_Accuracy.pdf

Wang Y. et al. (2021) 'On the Philosophical, Cognitive and Mathematical Foundations of Symbiotic Autonomous Systems (SAS)' Phil. Trans. Royal Society (A): Math, Phys & Engg Sci. 379(219x), August 2021, at https://arxiv.org/pdf/2102.07617.pdf

Weizenbaum J. (1966) 'ELIZA--a computer program for the study of natural language communication between man and machine' Commun. ACM 9, 1 (Jan 1966) 36-45, at https://dl.acm.org/doi/pdf/10.1145/365153.365168

Weizenbaum J. (1976) 'Computer power and human reason' W.H. Freeman & Co., 1976

Wingspread (1998) 'Wingspread Statement on the Precautionary Principle' 1998, at http://sehn.org/wingspread-conference-on-the-precautionary-principle/

Yampolskiy R.V. & Spellchecker M.S. (2016) 'Artificial Intelligence Safety and Cybersecurity: a Timeline of AI Failures' arXiv, 2016, at https://arxiv.org/pdf/1610.07997

Zheng N., Liu Z., Ren P., Ma Y., Chen S., Yu S., Xue J., Chen B. & Wang F. (2017) 'Hybrid-augmented intelligence: collaboration and cognition' Frontiers of Information Technology & Electronic Engineering 18, 1 (2017) 153-179, at https://link.springer.com/article/10.1631/FITEE.1700053

The original version of this paper was prepared in response to an invitation from Prof. Vladimir Mariano, Director of the Young Southeast Asia Leaders Initiative (YSEALI) of Fulbright University Vietnam, to deliver a Distinguished Lecture to YSEALI, broadcast around South-East Asia, on 7 June 2022. My thanks to Prof. Mariano for the challenge to take 'a long view of AI', its implications, and its directions.

Roger Clarke is Principal of Xamax Consultancy Pty Ltd, Canberra. He is also a Visiting Professor associated with the Allens Hub for Technology, Law and Innovation in UNSW Law, and a Visiting Professor in the Research School of Computer Science at the Australian National University.


The content and infrastructure for these community service pages are provided by Roger Clarke through his consultancy company, Xamax. From the site's beginnings in August 1994 until February 2009, the infrastructure was provided by the Australian National University. During that time, the site accumulated close to 30 million hits. It passed 65 million in early 2021. Sponsored by the Gallery, Bunhybee Grasslands, the extended Clarke Family, Knights of the Spatchcock and their drummer

Xamax Consultancy Pty Ltd ACN: 002 360 456 78 Sidaway St, Chapman ACT 2611 AUSTRALIA Tel: +61 2 6288 6916

Created: 20 March 2022 - Last Amended: 24 August 2022 by Roger Clarke - Site Last Verified: 15 February 2009

This document is at www.rogerclarke.com/EC/AITS-220824.html
